Welcome to the Haruko digital assets knowledge portal.

We are excited to share a curated database of the best crypto thought pieces and resources, which we hope will be helpful in your digital asset journey.

Let's jump in.

DLT consensus

PoS vs. PoW

https://dirtroads.substack.com/p/-43-first-principles-of-crypto-governance?r=k87cd&s=w&utm_campaign=post&utm_medium=web

https://cdixon.org/2018/02/18/why-decentralization-matters

Chris Dixon, Feb 2018

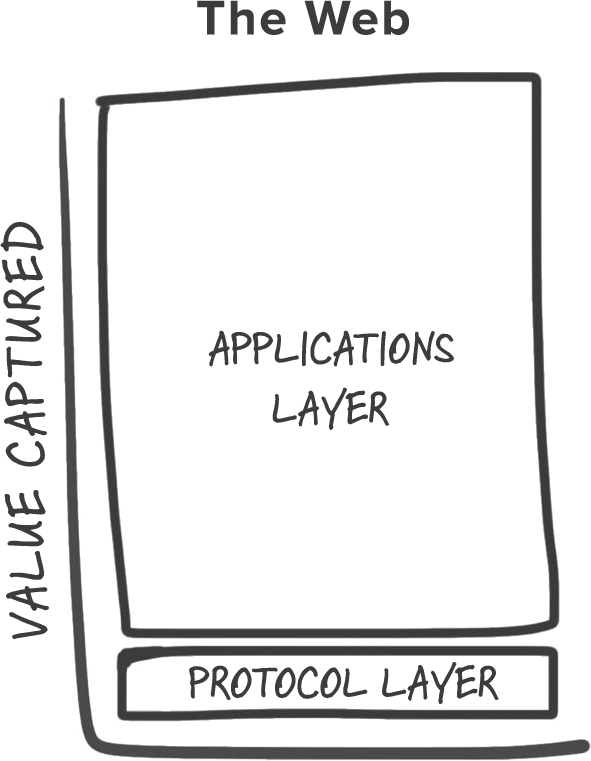

During the first era of the internet — from the 1980s through the early 2000s — internet services were built on open protocols that were controlled by the internet community. This meant that people or organizations could grow their internet presence knowing the rules of the game wouldn’t change later on. Huge web properties were started during this era including Yahoo, Google, Amazon, Facebook, LinkedIn, and YouTube. In the process, the importance of centralized platforms like AOL greatly diminished.

During the second era of the internet, from the mid 2000s to the present, for-profit tech companies — most notably Google, Apple, Facebook, and Amazon (GAFA) — built software and services that rapidly outpaced the capabilities of open protocols. The explosive growth of smartphones accelerated this trend as mobile apps became the majority of internet use. Eventually users migrated from open services to these more sophisticated, centralized services. Even when users still accessed open protocols like the web, they would typically do so mediated by GAFA software and services.

The good news is that billions of people got access to amazing technologies, many of which were free to use. The bad news is that it became much harder for startups, creators, and other groups to grow their internet presence without worrying about centralized platforms changing the rules on them, taking away their audiences and profits. This in turn stifled innovation, making the internet less interesting and dynamic. Centralization has also created broader societal tensions, which we see in the debates over subjects like fake news, state sponsored bots, “no platforming” of users, EU privacy laws, and algorithmic biases. These debates will only intensify in the coming years.

One response to this centralization is to impose government regulation on large internet companies. This response assumes that the internet is similar to past communication networks like the phone, radio, and TV networks. But the hardware-based networks of the past are fundamentally different from the internet, a software-based network. Once hardware-based networks are built, they are nearly impossible to rearchitect. Software-based networks can be rearchitected through entrepreneurial innovation and market forces.

The internet is the ultimate software-based network, consisting of a relatively simple core layer connecting billions of fully programmable computers at the edge. Software is simply the encoding of human thought, and as such has an almost unbounded design space. Computers connected to the internet are, by and large, free to run whatever software their owners choose. Whatever can be dreamt up, with the right set of incentives, can quickly propagate across the internet. Internet architecture is where technical creativity and incentive design intersect.

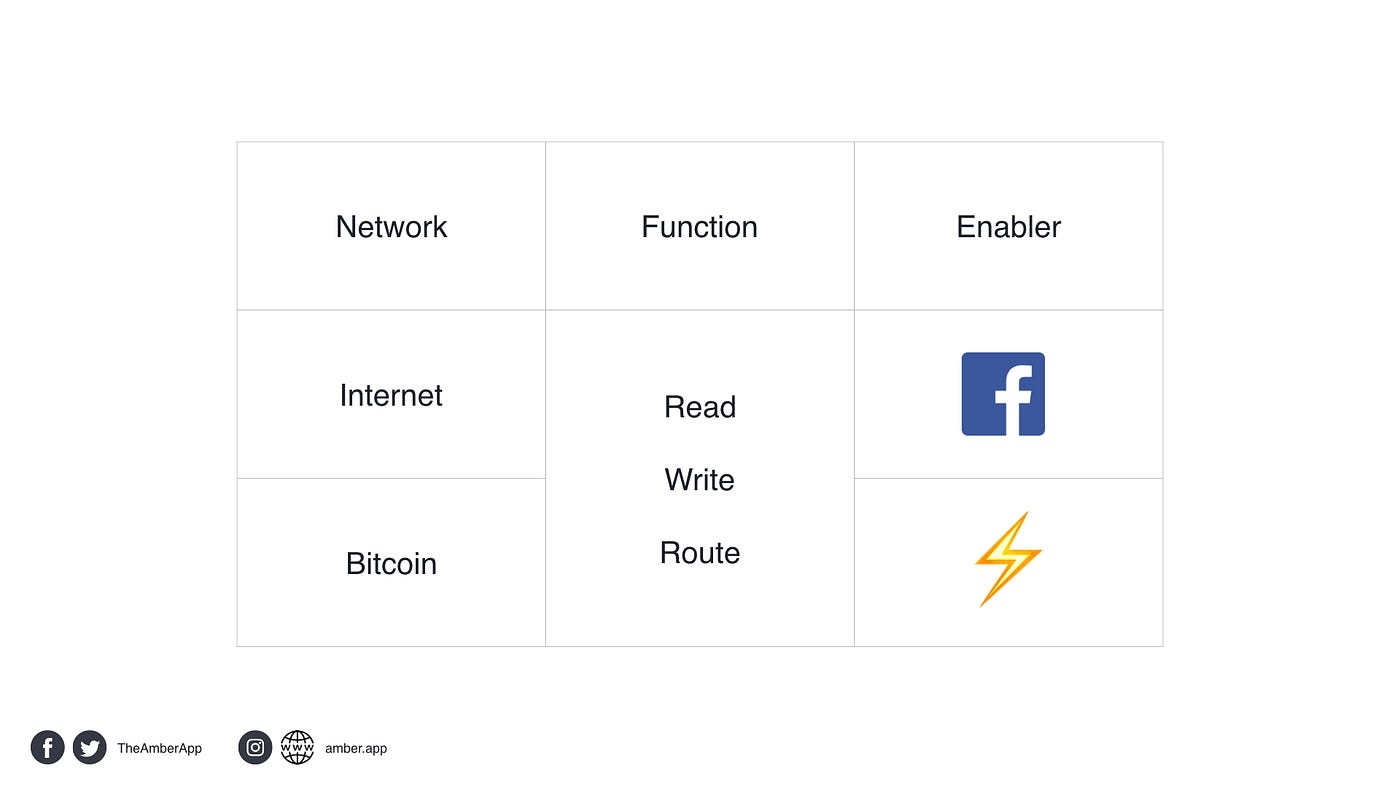

The internet is still early in its evolution: the core internet services will likely be almost entirely rearchitected in the coming decades. This will be enabled by crypto-economic networks, a generalization of the ideas first introduced in Bitcoin and further developed in Ethereum. Cryptonetworks combine the best features of the first two internet eras: community-governed, decentralized networks with capabilities that will eventually exceed those of the most advanced centralized services.

Decentralization is a commonly misunderstood concept. For example, it is sometimes said that the reason cryptonetwork advocates favor decentralization is to resist government censorship, or because of libertarian political views. These are not the main reasons decentralization is important.

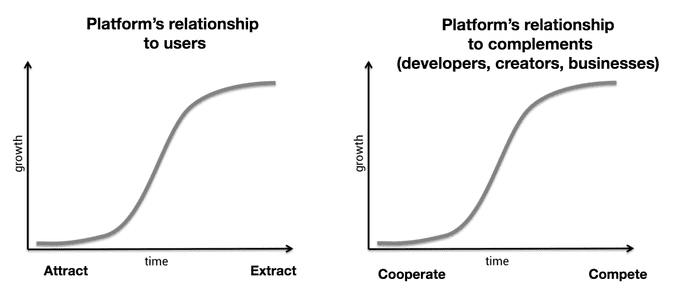

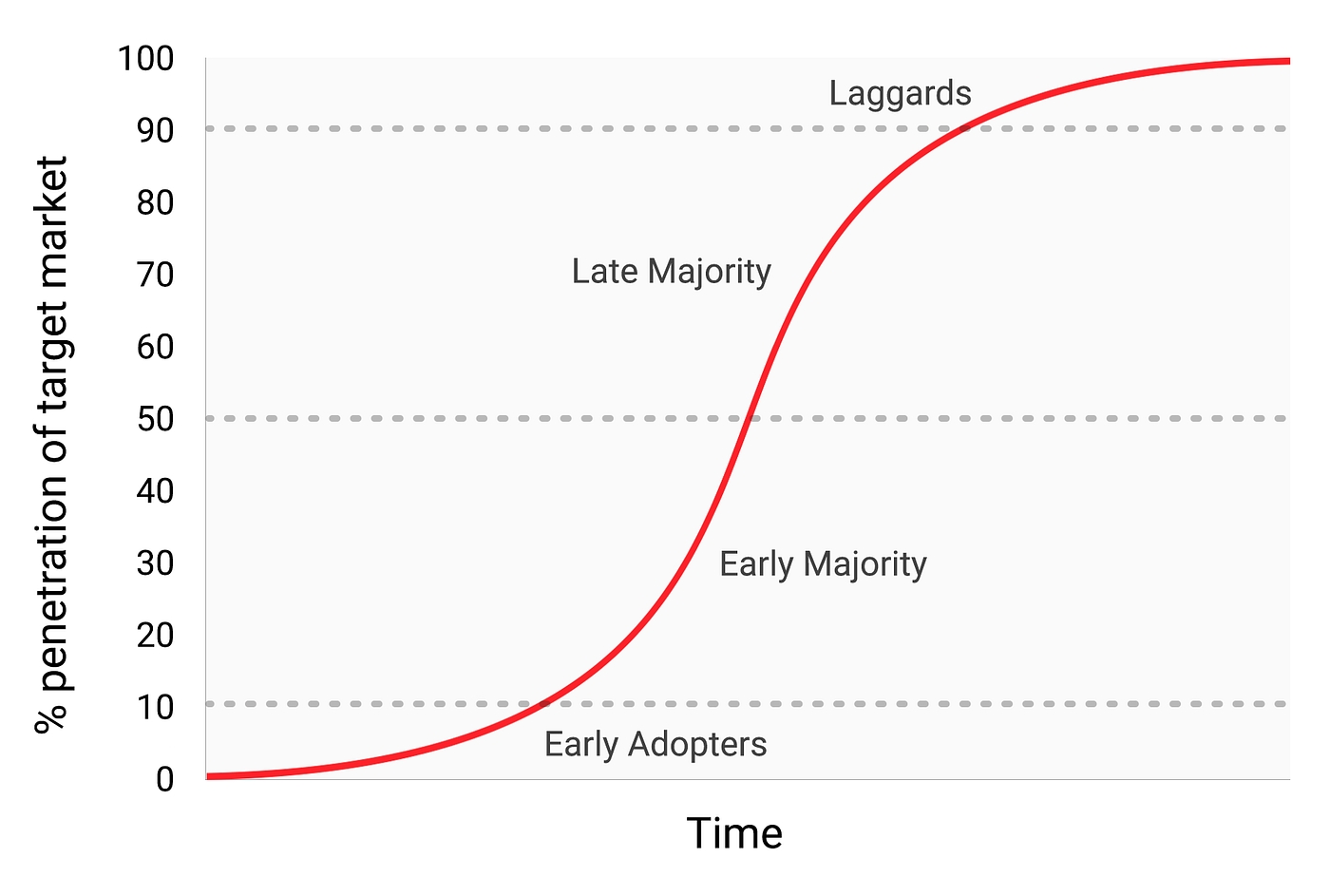

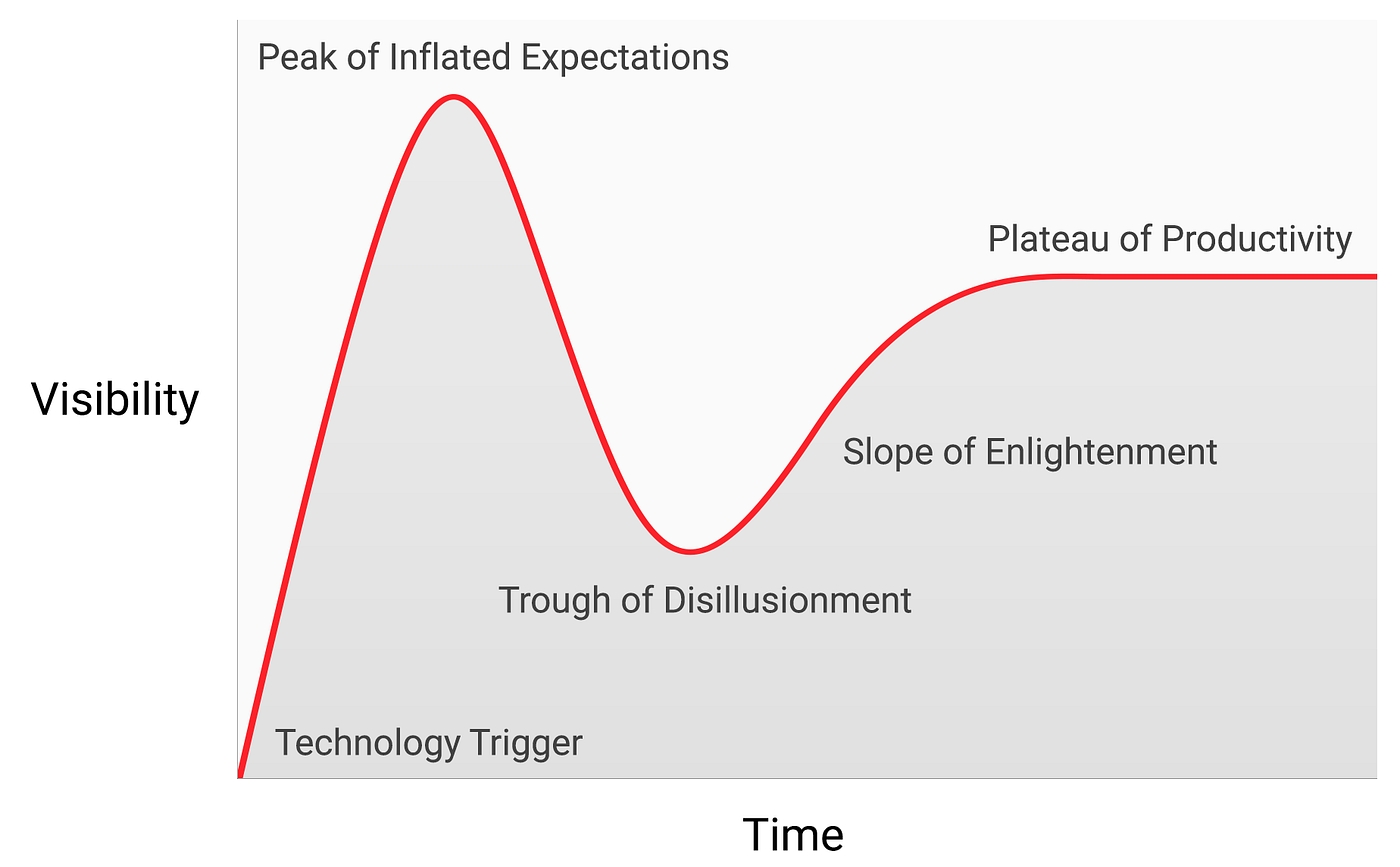

Let’s look at the problems with centralized platforms. Centralized platforms follow a predictable life cycle. When they start out, they do everything they can to recruit users and 3rd-party complements like developers, businesses, and media organizations. They do this to make their services more valuable, as platforms (by definition) are systems with multi-sided network effects. As platforms move up the adoption S-curve, their power over users and 3rd parties steadily grows.

When they hit the top of the S-curve, their relationships with network participants change from positive-sum to zero-sum. The easiest way to continue growing lies in extracting data from users and competing with complements over audiences and profits. Historical examples of this are Microsoft vs. Netscape, Google vs. Yelp, Facebook vs. Zynga, and Twitter vs. its 3rd-party clients. Operating systems like iOS and Android have behaved better, although they still take a healthy 30% tax, reject apps for seemingly arbitrary reasons, and subsume the functionality of 3rd-party apps at will.

For 3rd parties, this transition from cooperation to competition feels like a bait-and-switch. Over time, the best entrepreneurs, developers, and investors have become wary of building on top of centralized platforms. We now have decades of evidence that doing so will end in disappointment. In addition, users give up privacy and control of their data, and become vulnerable to security breaches. These problems with centralized platforms will likely become even more pronounced in the future.

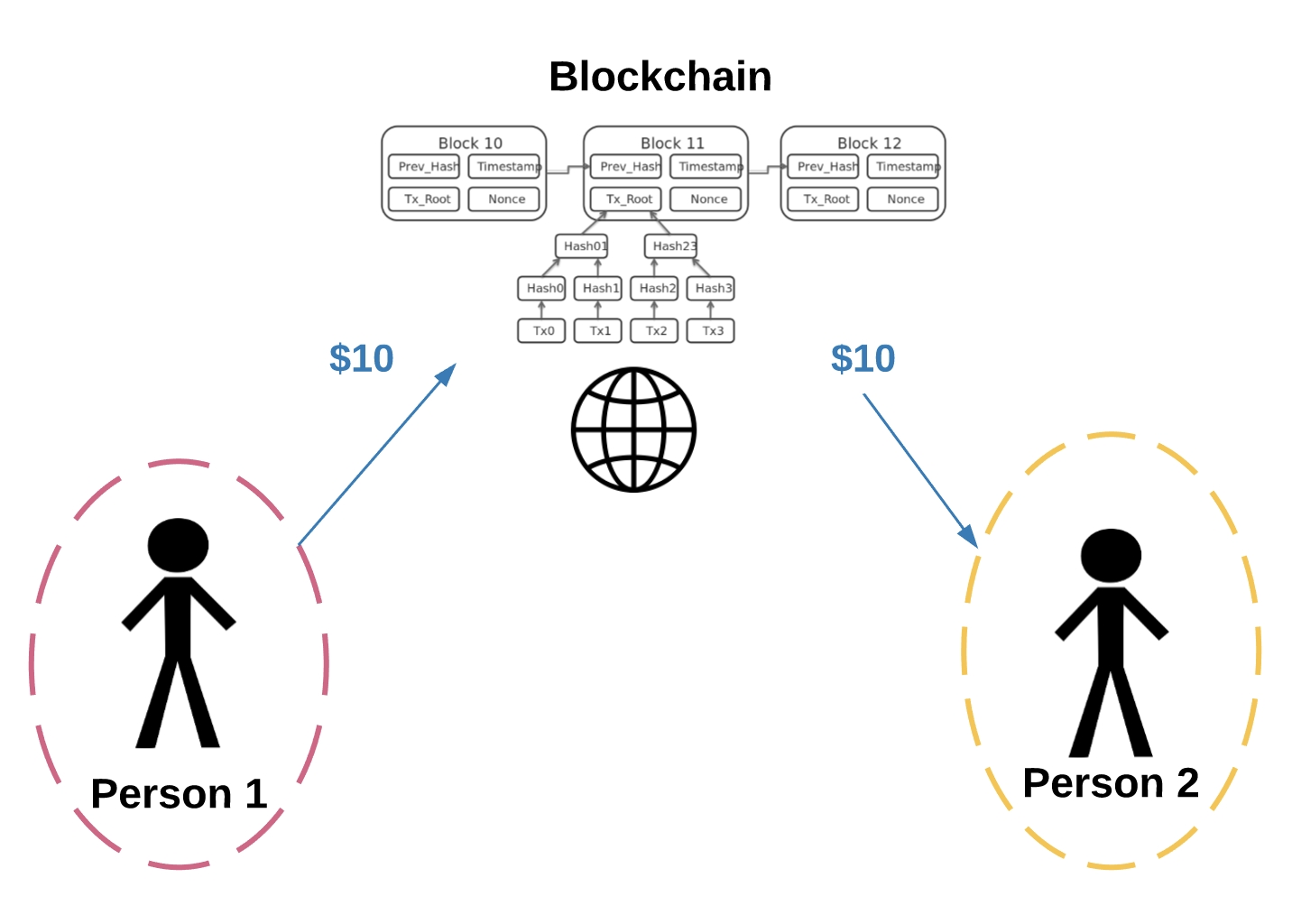

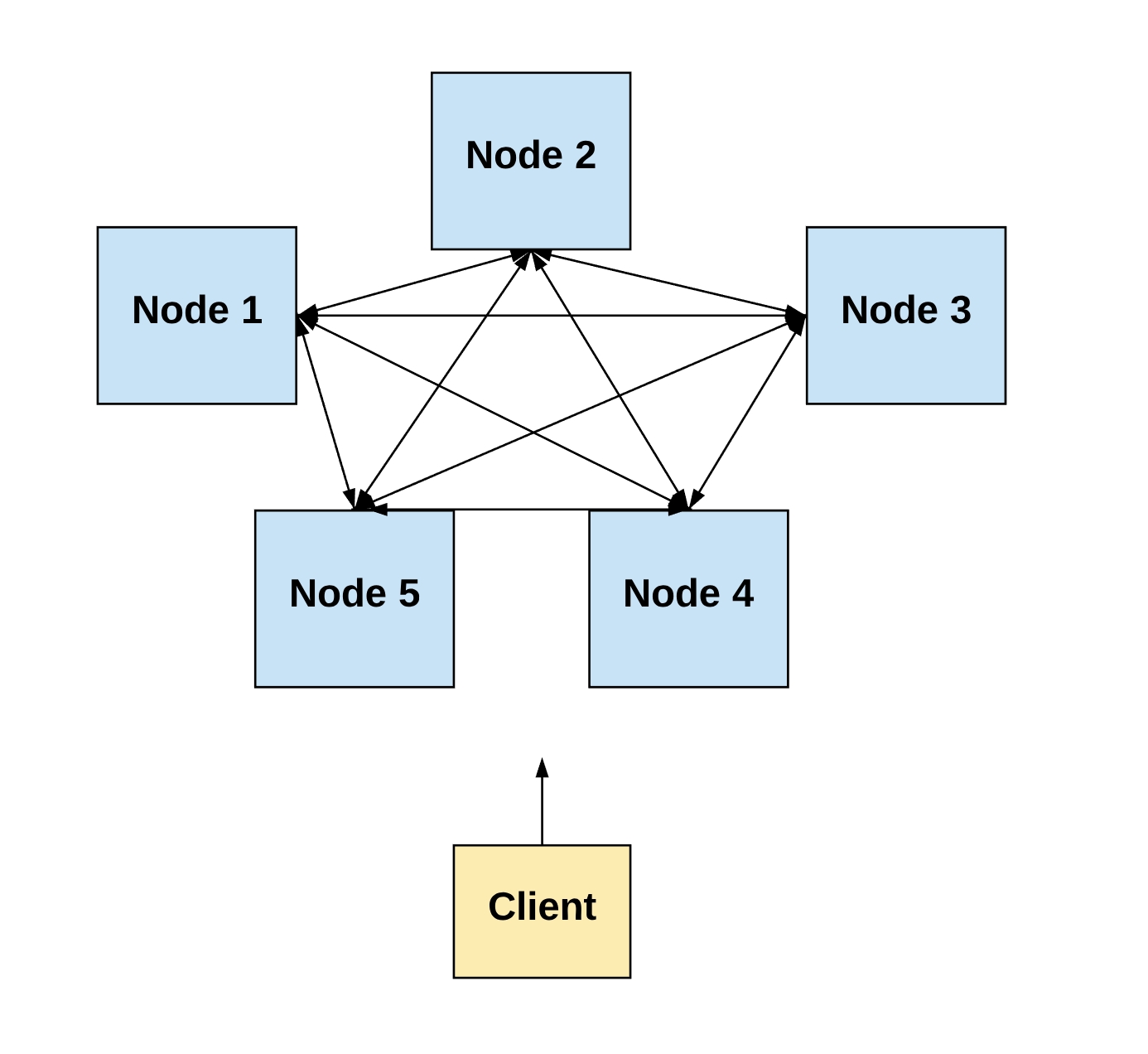

Cryptonetworks are networks built on top of the internet that 1) use consensus mechanisms such as blockchains to maintain and update state, 2) use cryptocurrencies (coins/tokens) to incentivize consensus participants (miners/validators) and other network participants. Some cryptonetworks, such as Ethereum, are general programming platforms that can be used for almost any purpose. Other cryptonetworks are special purpose, for example Bitcoin is intended primarily for storing value, Golem for performing computations, and Filecoin for decentralized file storage.
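The definition above names blockchains as one consensus mechanism for maintaining shared state. The minimal sketch below illustrates only the hash-linking that makes a blockchain's history tamper-evident; it deliberately leaves out consensus itself (who may append a block and how nodes agree on it), mining or staking, signatures, and networking. `Block`, `append_block`, `is_valid` and `GENESIS_PARENT` are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    """Toy block: an ordered batch of transactions plus a link to its parent."""
    index: int
    prev_hash: str
    transactions: list = field(default_factory=list)

    def digest(self) -> str:
        # Hash a canonical JSON encoding so every node derives the same value.
        payload = json.dumps(
            {"index": self.index, "prev": self.prev_hash, "txs": self.transactions},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


GENESIS_PARENT = "0" * 64  # placeholder parent hash for the first block


def append_block(chain: list, transactions: list) -> Block:
    """Create a block that commits to the hash of the current tip, then append it."""
    prev = chain[-1].digest() if chain else GENESIS_PARENT
    block = Block(index=len(chain), prev_hash=prev, transactions=transactions)
    chain.append(block)
    return block


def is_valid(chain: list) -> bool:
    """Check that every block still points at the hash of its predecessor."""
    if chain and chain[0].prev_hash != GENESIS_PARENT:
        return False
    return all(chain[i].prev_hash == chain[i - 1].digest() for i in range(1, len(chain)))


if __name__ == "__main__":
    chain: list = []
    append_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
    append_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
    append_block(chain, [])
    print(is_valid(chain))  # True
    chain[1].transactions[0]["amount"] = 500  # try to rewrite history
    print(is_valid(chain))  # False: block 2 no longer matches block 1's hash
```

Editing the newest block would not be caught by this check alone; in a real network it is the consensus mechanism (proof of work, proof of stake, and so on) that decides which tip everyone accepts.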

Early internet protocols were technical specifications created by working groups or non-profit organizations that relied on the alignment of interests in the internet community to gain adoption. This method worked well during the very early stages of the internet but since the early 1990s very few new protocols have gained widespread adoption. Cryptonetworks fix these problems by providing economic incentives to developers, maintainers, and other network participants in the form of tokens. They are also much more technically robust. For example, they are able to keep state and do arbitrary transformations on that state, something past protocols could never do.
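"Keeping state and doing arbitrary transformations on that state" is often described as a state transition function: every node starts from the same state, applies the same ordered transactions, and deterministically arrives at the same result. The sketch below assumes the simplest possible state, a map of account balances, and hypothetical helper names; general-purpose platforms such as Ethereum allow far richer, programmable transitions.

```python
from typing import Dict, List, Tuple

Balances = Dict[str, int]
Tx = Tuple[str, str, int]  # (sender, recipient, amount)


def apply_tx(state: Balances, tx: Tx) -> Balances:
    """Return the new state after one transfer; reject invalid transfers."""
    sender, recipient, amount = tx
    if amount <= 0:
        raise ValueError("amount must be positive")
    if state.get(sender, 0) < amount:
        raise ValueError(f"{sender} has insufficient balance")
    new_state = dict(state)  # never mutate the previous state in place
    new_state[sender] -= amount
    new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def apply_block(state: Balances, txs: List[Tx]) -> Balances:
    """Apply a block's transactions in order; any invalid one rejects the block."""
    for tx in txs:
        state = apply_tx(state, tx)
    return state


if __name__ == "__main__":
    genesis = {"alice": 10}
    print(apply_block(genesis, [("alice", "bob", 4), ("bob", "carol", 1)]))
    # {'alice': 6, 'bob': 3, 'carol': 1}
```

Because `apply_tx` is a pure function of the previous state and the transaction, any two honest nodes that agree on the transaction order also agree on the resulting state.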

Cryptonetworks use multiple mechanisms to ensure that they stay neutral as they grow, preventing the bait-and-switch of centralized platforms. First, the contract between cryptonetworks and their participants is enforced in open source code. Second, they are kept in check through mechanisms for “voice” and “exit.” Participants are given voice through community governance, both “on chain” (via the protocol) and “off chain” (via the social structures around the protocol). Participants can exit either by leaving the network and selling their coins, or in the extreme case by forking the protocol.

In short, cryptonetworks align network participants to work together toward a common goal — the growth of the network and the appreciation of the token. This alignment is one of the main reasons Bitcoin continues to defy skeptics and flourish, even while new cryptonetworks like Ethereum have grown alongside it.

Today’s cryptonetworks suffer from limitations that keep them from seriously challenging centralized incumbents. The most severe limitations are around performance and scalability. The next few years will be about fixing these limitations and building networks that form the infrastructure layer of the crypto stack. After that, most of the energy will turn to building applications on top of that infrastructure.

It’s one thing to say decentralized networks should win, and another thing to say they will win. Let’s look at specific reasons to be optimistic about this.

Software and web services are built by developers. There are millions of highly skilled developers in the world. Only a small fraction work at large technology companies, and only a small fraction of those work on new product development. Many of the most important software projects in history were created by startups or by communities of independent developers.

“No matter who you are, most of the smartest people work for someone else.” — Bill Joy

Decentralized networks can win the third era of the internet for the same reason they won the first era: by winning the hearts and minds of entrepreneurs and developers.

An illustrative analogy is the rivalry in the 2000s between Wikipedia and its centralized competitors like Encarta. If you compared the two products in the early 2000s, Encarta was a far better product, with better topic coverage and higher accuracy. But Wikipedia improved at a much faster rate, because it had an active community of volunteer contributors who were attracted to its decentralized, community-governed ethos. By 2005, Wikipedia was the most popular reference site on the internet. Encarta was shut down in 2009.

The lesson is that when you compare centralized and decentralized systems you need to consider them dynamically, as processes, instead of statically, as rigid products. Centralized systems often start out fully baked, but only get better at the rate at which employees at the sponsoring company improve them. Decentralized systems start out half-baked but, under the right conditions, grow exponentially as they attract new contributors.

In the case of cryptonetworks, there are multiple, compounding feedback loops involving developers of the core protocol, developers of complementary cryptonetworks, developers of 3rd party applications, and service providers who operate the network. These feedback loops are further amplified by the incentives of the associated token, which — as we’ve seen with Bitcoin and Ethereum — can supercharge the rate at which crypto communities develop (and sometimes lead to negative outcomes, as with the excessive electricity consumed by Bitcoin mining).

The question of whether decentralized or centralized systems will win the next era of the internet reduces to who will build the most compelling products, which in turn reduces to who will get more high-quality developers and entrepreneurs on their side. GAFA has many advantages, including cash reserves, large user bases, and operational infrastructure. Cryptonetworks have a significantly more attractive value proposition to developers and entrepreneurs. If they can win their hearts and minds, they can mobilize far more resources than GAFA, and rapidly outpace their product development.

“If you asked people in 1989 what they needed to make their life better, it was unlikely that they would have said a decentralized network of information nodes that are linked using hypertext.” — Farmer & Farmer

Centralized platforms often come bundled at launch with compelling apps: Facebook had its core socializing features and the iPhone had a number of key apps. Decentralized platforms, by contrast, often launch half-baked and without clear use cases. As a result, they need to go through two phases of product-market fit: 1) product-market fit between the platform and the developers/entrepreneurs who will finish the platform and build out the ecosystem, and 2) product-market fit between the platform/ecosystem and end users. This two-stage process is what causes many people — including sophisticated technologists — to consistently underestimate the potential of decentralized platforms.

Decentralized networks aren’t a silver bullet that will fix all the problems on the internet. But they offer a much better approach than centralized systems.

Compare the problem of Twitter spam to the problem of email spam. Since Twitter closed their network to 3rd-party developers, the only company working on Twitter spam has been Twitter itself. By contrast, there were hundreds of companies that tried to fight email spam, financed by billions of dollars in venture capital and corporate funding. Email spam isn’t solved, but it’s a lot better now, because 3rd parties knew that the email protocol was decentralized, so they could build businesses on top of it without worrying about the rules of the game changing later on.

Or consider the problem of network governance. Today, unaccountable groups of employees at large platforms decide how information gets ranked and filtered, which users get promoted and which get banned, and other important governance decisions. In cryptonetworks, these decisions are made by the community, using open and transparent mechanisms. As we know from the offline world, democratic systems aren’t perfect, but they are a lot better than the alternatives.

Centralized platforms have been dominant for so long that many people have forgotten there is a better way to build internet services. Cryptonetworks are a powerful way to develop community-owned networks and provide a level playing field for 3rd-party developers, creators, and businesses. We saw the value of decentralized systems in the first era of the internet. Hopefully we’ll get to see it again in the next.

Originally published on Medium.

VIEWS EXPRESSED IN “CONTENT” (INCLUDING POSTS, PODCASTS, VIDEOS) LINKED ON THIS WEBSITE OR POSTED IN SOCIAL MEDIA AND OTHER PLATFORMS (COLLECTIVELY, “CONTENT DISTRIBUTION OUTLETS”) ARE MY OWN AND ARE NOT THE VIEWS OF AH CAPITAL MANAGEMENT, L.L.C. (“A16Z”) OR ITS RESPECTIVE AFFILIATES. AH CAPITAL MANAGEMENT IS AN INVESTMENT ADVISER REGISTERED WITH THE SECURITIES AND EXCHANGE COMMISSION. REGISTRATION AS AN INVESTMENT ADVISER DOES NOT IMPLY ANY SPECIAL SKILL OR TRAINING. THE POSTS ARE NOT DIRECTED TO ANY INVESTORS OR POTENTIAL INVESTORS, AND DO NOT CONSTITUTE AN OFFER TO SELL -- OR A SOLICITATION OF AN OFFER TO BUY -- ANY SECURITIES, AND MAY NOT BE USED OR RELIED UPON IN EVALUATING THE MERITS OF ANY INVESTMENT.

THE CONTENT SHOULD NOT BE CONSTRUED AS OR RELIED UPON IN ANY MANNER AS INVESTMENT, LEGAL, TAX, OR OTHER ADVICE. YOU SHOULD CONSULT YOUR OWN ADVISERS AS TO LEGAL, BUSINESS, TAX, AND OTHER RELATED MATTERS CONCERNING ANY INVESTMENT. ANY PROJECTIONS, ESTIMATES, FORECASTS, TARGETS, PROSPECTS AND/OR OPINIONS EXPRESSED IN THESE MATERIALS ARE SUBJECT TO CHANGE WITHOUT NOTICE AND MAY DIFFER OR BE CONTRARY TO OPINIONS EXPRESSED BY OTHERS. ANY CHARTS PROVIDED HERE ARE FOR INFORMATIONAL PURPOSES ONLY, AND SHOULD NOT BE RELIED UPON WHEN MAKING ANY INVESTMENT DECISION. CERTAIN INFORMATION CONTAINED IN HERE HAS BEEN OBTAINED FROM THIRD-PARTY SOURCES. WHILE TAKEN FROM SOURCES BELIEVED TO BE RELIABLE, I HAVE NOT INDEPENDENTLY VERIFIED SUCH INFORMATION AND MAKE NO REPRESENTATIONS ABOUT THE ENDURING ACCURACY OF THE INFORMATION OR ITS APPROPRIATENESS FOR A GIVEN SITUATION. THE CONTENT SPEAKS ONLY AS OF THE DATE INDICATED.

UNDER NO CIRCUMSTANCES SHOULD ANY POSTS OR OTHER INFORMATION PROVIDED ON THIS WEBSITE -- OR ON ASSOCIATED CONTENT DISTRIBUTION OUTLETS -- BE CONSTRUED AS AN OFFER SOLICITING THE PURCHASE OR SALE OF ANY SECURITY OR INTEREST IN ANY POOLED INVESTMENT VEHICLE SPONSORED, DISCUSSED, OR MENTIONED BY A16Z PERSONNEL. NOR SHOULD IT BE CONSTRUED AS AN OFFER TO PROVIDE INVESTMENT ADVISORY SERVICES; AN OFFER TO INVEST IN AN A16Z-MANAGED POOLED INVESTMENT VEHICLE WILL BE MADE SEPARATELY AND ONLY BY MEANS OF THE CONFIDENTIAL OFFERING DOCUMENTS OF THE SPECIFIC POOLED INVESTMENT VEHICLES -- WHICH SHOULD BE READ IN THEIR ENTIRETY, AND ONLY TO THOSE WHO, AMONG OTHER REQUIREMENTS, MEET CERTAIN QUALIFICATIONS UNDER FEDERAL SECURITIES LAWS. SUCH INVESTORS, DEFINED AS ACCREDITED INVESTORS AND QUALIFIED PURCHASERS, ARE GENERALLY DEEMED CAPABLE OF EVALUATING THE MERITS AND RISKS OF PROSPECTIVE INVESTMENTS AND FINANCIAL MATTERS. THERE CAN BE NO ASSURANCES THAT A16Z’S INVESTMENT OBJECTIVES WILL BE ACHIEVED OR INVESTMENT STRATEGIES WILL BE SUCCESSFUL. ANY INVESTMENT IN A VEHICLE MANAGED BY A16Z INVOLVES A HIGH DEGREE OF RISK INCLUDING THE RISK THAT THE ENTIRE AMOUNT INVESTED IS LOST. ANY INVESTMENTS OR PORTFOLIO COMPANIES MENTIONED, REFERRED TO, OR DESCRIBED ARE NOT REPRESENTATIVE OF ALL INVESTMENTS IN VEHICLES MANAGED BY A16Z AND THERE CAN BE NO ASSURANCE THAT THE INVESTMENTS WILL BE PROFITABLE OR THAT OTHER INVESTMENTS MADE IN THE FUTURE WILL HAVE SIMILAR CHARACTERISTICS OR RESULTS. A LIST OF INVESTMENTS MADE BY FUNDS MANAGED BY A16Z IS AVAILABLE AT HTTPS://A16Z.COM/INVESTMENTS/. EXCLUDED FROM THIS LIST ARE INVESTMENTS FOR WHICH THE ISSUER HAS NOT PROVIDED PERMISSION FOR A16Z TO DISCLOSE PUBLICLY AS WELL AS UNANNOUNCED INVESTMENTS IN PUBLICLY TRADED DIGITAL ASSETS. PAST RESULTS OF ANDREESSEN HOROWITZ’S INVESTMENTS, POOLED INVESTMENT VEHICLES, OR INVESTMENT STRATEGIES ARE NOT NECESSARILY INDICATIVE OF FUTURE RESULTS. 
PLEASE SEE HTTPS://A16Z.COM/DISCLOSURES FOR ADDITIONAL IMPORTANT INFORMATION.

https://medium.com/@VitalikButerin/the-meaning-of-decentralization-a0c92b76a274

Vitalik Buterin, Feb 2017

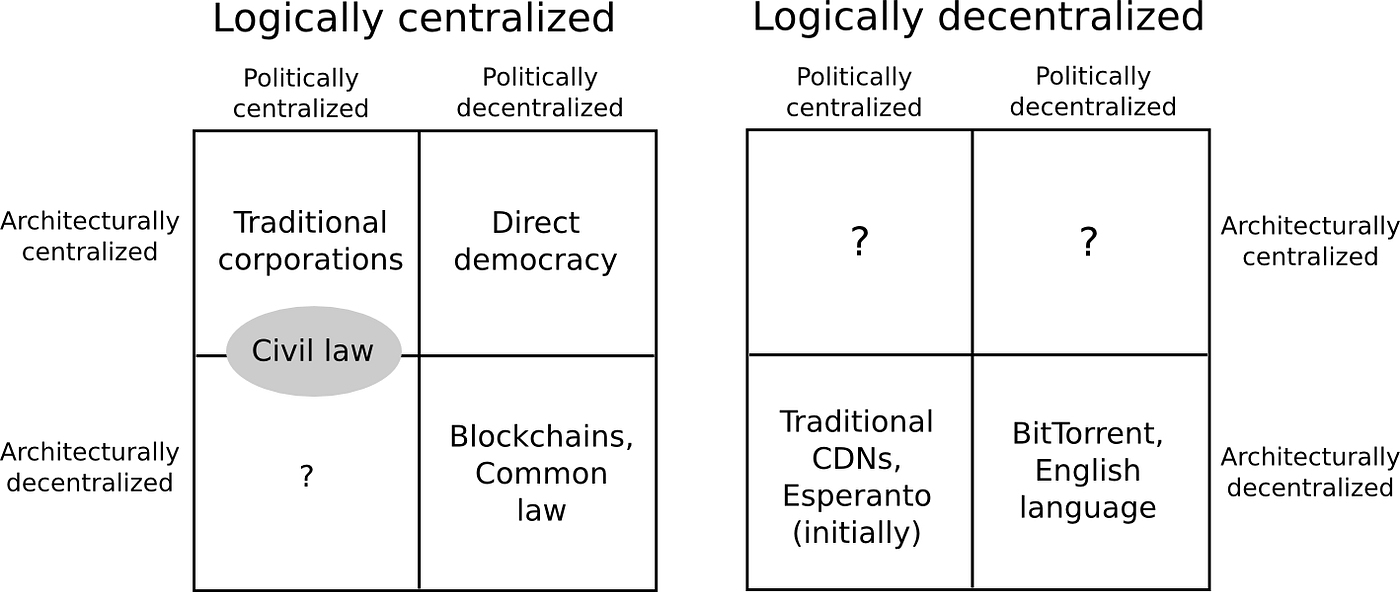

“Decentralization” is one of the words that is used in the cryptoeconomics space the most frequently, and is often even viewed as a blockchain’s entire raison d’être, but it is also one of the words that is perhaps defined the most poorly. Thousands of hours of research, and billions of dollars of hashpower, have been spent for the sole purpose of attempting to achieve decentralization, and to protect and improve it, and when discussions get rivalrous it is extremely common for proponents of one protocol (or protocol extension) to claim that the opposing proposals are “centralized” as the ultimate knockdown argument.

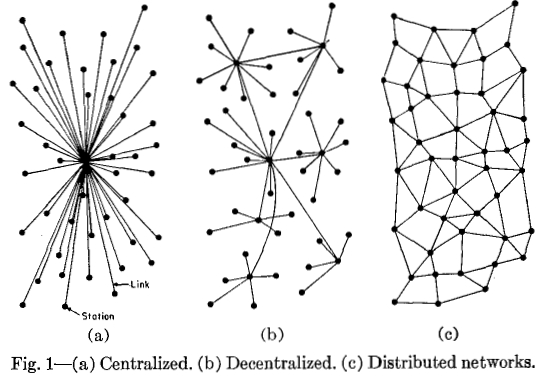

But there is often a lot of confusion as to what this word actually means. Consider, for example, the following completely unhelpful, but unfortunately all too common, diagram:

Now, consider the two answers on Quora for “what is the difference between distributed and decentralized”. The first essentially parrots the above diagram, while the second makes the entirely different claim that “distributed means not all the processing of the transactions is done in the same place”, whereas “decentralized means that not one single entity has control over all the processing”. Meanwhile, the top answer on the Ethereum stack exchange gives a very similar diagram, but with the words “decentralized” and “distributed” swapped! Clearly, a clarification is in order.

When people talk about software decentralization, there are actually three separate axes of centralization/decentralization that they may be talking about. While in some cases it is difficult to see how you can have one without the other, in general they are quite independent of each other. The axes are as follows:

Architectural (de)centralization — how many physical computers is a system made up of? How many of those computers can it tolerate breaking down at any single time?

Political (de)centralization — how many individuals or organizations ultimately control the computers that the system is made up of?

Logical (de)centralization — do the interface and data structures that the system presents and maintains look more like a single monolithic object, or an amorphous swarm? One simple heuristic is: if you cut the system in half, including both providers and users, will both halves continue to fully operate as independent units?
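The "cut the system in half" heuristic can be made concrete with a toy model. The sketch assumes, for illustration only, that a logically centralized system protects its single authoritative state by letting a side keep operating only if it holds a strict majority of nodes (a common quorum rule in consensus protocols), while a logically decentralized system, such as a BitTorrent-style swarm, lets any non-empty side carry on. The `System` type and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    nodes: int
    # True if the system must maintain one agreed-upon global state.
    logically_centralized: bool


def half_can_operate(system: System, half_size: int) -> bool:
    """Can a partition containing `half_size` nodes keep fully operating?

    Assumption: a logically centralized system only lets a side continue if it
    holds a strict majority (so two sides can never diverge), while a logically
    decentralized system lets any non-empty side continue on its own.
    """
    if half_size <= 0:
        return False
    if system.logically_centralized:
        return 2 * half_size > system.nodes
    return True


def survives_cut(system: System) -> bool:
    """Cut the system in half: do BOTH halves still operate independently?"""
    left = system.nodes // 2
    right = system.nodes - left
    return half_can_operate(system, left) and half_can_operate(system, right)


if __name__ == "__main__":
    for s in (System("file-sharing swarm", 1000, False),
              System("majority-quorum ledger", 1000, True)):
        print(s.name, survives_cut(s))
```

Under this model the ledger fails the test however many machines it runs on: architectural decentralization (more nodes) does not change its logical centralization.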

We can try to put these three dimensions into a chart:

Note that a lot of these placements are very rough and highly debatable. But let’s try going through some of them:

Traditional corporations are politically centralized (one CEO), architecturally centralized (one head office) and logically centralized (can’t really split them in half).

Civil law relies on a centralized law-making body, whereas common law is built up of precedent made by many individual judges. Civil law still has some architectural decentralization as there are many courts that nevertheless have large discretion, but common law has more of it. Both are logically centralized (“the law is the law”).

Languages are logically decentralized; the English spoken between Alice and Bob and the English spoken between Charlie and David do not need to agree at all. There is no centralized infrastructure required for a language to exist, and the rules of English grammar are not created or controlled by any one single person (whereas Esperanto was originally invented by Ludwik Zamenhof, though now it functions more like a living language that evolves incrementally with no authority).

Many times when people talk about the virtues of a blockchain, they describe the convenience benefits of having “one central database”; that centralization is logical centralization, and it’s a kind of centralization that is arguably in many cases good (though Juan Benet from IPFS would also push for logical decentralization wherever possible, because logically decentralized systems tend to be good at surviving network partitions, work well in regions of the world that have poor connectivity, etc; see also explicitly advocating logical decentralization).

Architectural centralization often leads to political centralization, though not necessarily — in a formal democracy, politicians meet and hold votes in some physical governance chamber, but the maintainers of this chamber do not end up deriving any substantial amount of power over decision-making as a result. In computerized systems, architectural but not political decentralization might happen if there is an online community which uses a centralized forum for convenience, but where there is a widely agreed social contract that if the owners of the forum act maliciously then everyone will move to a different forum (communities that are formed around rebellion against what they see as censorship in another forum likely have this property in practice).

Logical centralization makes architectural decentralization harder, but not impossible — see how decentralized consensus networks have already been proven to work, but are more difficult than maintaining BitTorrent. And logical centralization makes political decentralization harder — in logically centralized systems, it’s harder to resolve contention by simply agreeing to “live and let live”.

The next question is, why is decentralization useful in the first place? There are generally several arguments raised:

Fault tolerance — decentralized systems are less likely to fail accidentally because they rely on many separate components that are not likely to all fail at the same time.

Attack resistance — decentralized systems are more expensive to attack and destroy or manipulate because they lack sensitive central points that can be attacked at much lower cost than the economic size of the surrounding system.

Collusion resistance — it is much harder for participants in decentralized systems to collude to act in ways that benefit them at the expense of other participants, whereas the leaderships of corporations and governments collude in ways that benefit themselves but harm less well-coordinated citizens, customers, employees and the general public all the time.

All three arguments are important and valid, but all three arguments lead to some interesting and different conclusions once you start thinking about protocol decisions with the three individual perspectives in mind. Let us try to expand out each of these arguments one by one.

Regarding fault tolerance, the core argument is simple. What’s less likely to happen: one single computer failing, or five out of ten computers all failing at the same time? The principle is uncontroversial, and is used in real life in many situations, including jet engines, backup power generators (particularly in places like hospitals), military infrastructure, financial portfolio diversification, and yes, computer networks.
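Under the naive model, in which every computer fails independently, the question is a binomial tail calculation. The per-computer failure probability `p = 0.01` below is an illustrative assumption, not a figure from the essay.

```python
from math import comb


def p_at_least_k_fail(n: int, k: int, p: float) -> float:
    """Binomial tail: probability that at least k of n components fail,
    assuming each fails independently with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    p = 0.01  # illustrative per-computer failure probability (assumption)
    print(f"one computer fails:         {p_at_least_k_fail(1, 1, p):.2e}")
    print(f"5+ of 10 computers all fail: {p_at_least_k_fail(10, 5, p):.2e}")
```

With these numbers a single machine fails 1% of the time, while five or more of ten fail together only about 2.4 × 10^-8 of the time. That gap is what redundancy promises, provided the independence assumption actually holds.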

However, this kind of decentralization, while still effective and highly important, often turns out to be far less of a panacea than a naive mathematical model would sometimes predict. The reason is common mode failure. Sure, four jet engines are less likely to fail than one jet engine, but what if all four engines were made in the same factory, and a fault was introduced in all four by the same rogue employee?
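A simple way to see why redundancy disappoints is to add a shared failure cause to the independent model. In the hypothetical two-part model below, with probability `q` a common-mode fault (for example, the same defect introduced at the same factory) disables every component at once; otherwise each component fails independently with probability `p`. Both numbers are illustrative assumptions.

```python
def p_all_fail(n: int, p: float, q: float) -> float:
    """Probability that all n redundant components fail.

    Hypothetical two-part model: with probability q a common-mode fault
    disables every component at once; otherwise each one fails
    independently with probability p.
    """
    return q + (1 - q) * p**n


if __name__ == "__main__":
    p, q = 1e-3, 1e-6  # illustrative numbers, not from the essay
    for n in (1, 2, 4, 8):
        print(n,
              f"independent only: {p_all_fail(n, p, 0.0):.1e}",
              f"with common mode: {p_all_fail(n, p, q):.1e}")
```

However many engines are added, the result never falls below `q`: redundancy only protects against failures that really are independent, which is exactly the concern the essay raises about shared client software and shared development teams.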

Do blockchains as they are today manage to protect against common mode failure? Not necessarily. Consider the following scenarios:

All nodes in a blockchain run the same client software, and this client software turns out to have a bug.

All nodes in a blockchain run the same client software, and the development team of this software turns out to be socially corrupted.

The research team that is proposing protocol upgrades turns out to be socially corrupted.

A holistic view of fault tolerance decentralization would look at all of these aspects, and see how they can be minimized. Some natural conclusions that arise are fairly obvious:

It is crucially important to have multiple competing implementations.

The knowledge of the technical considerations behind protocol upgrades must be democratized, so that more people can feel comfortable participating in research discussions and criticizing protocol changes that are clearly bad.

Core developers and researchers should be employed by multiple companies or organizations.

Note that the fault tolerance requirement in its naive form focuses on architectural decentralization, but once you start thinking about fault tolerance of the community that governs the protocol’s ongoing development, then political decentralization is important too.

Now, let’s look at attack resistance. In some pure economic models, you sometimes get the result that decentralization does not even matter. If you create a protocol where the validators are guaranteed to lose $50 million if a 51% attack (i.e., finality reversion) happens, then it doesn’t really matter if the validators are controlled by one company or 100 companies — $50 million economic security margin is $50 million economic security margin. In fact, there are arguments why centralization may even maximize this notion of economic security (the transaction selection model of existing blockchains reflects this insight, as transaction inclusion into blocks through miners/block proposers is actually a very rapidly rotating dictatorship).

However, once you adopt a richer economic model, and particularly one that admits the possibility of coercion (or much milder things like targeted DoS attacks against nodes), decentralization becomes more important. If you threaten one person with death, suddenly $50 million will not matter to them as much anymore. But if the $50 million is spread between ten people, then you have to threaten ten times as many people, and do it all at the same time. In general, the modern world is in many cases characterized by an attack/defense asymmetry in favor of the attacker — a building that costs $10 million to build may cost less than $100,000 to destroy, but the attacker’s leverage is often sublinear: if a building that costs $10 million to build costs $100,000 to destroy, a building that costs $1 million to build may realistically cost perhaps $30,000 to destroy. Smaller targets give the defender better ratios.
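The arithmetic behind this paragraph can be checked in a few lines. The dollar figures are the essay's own; `cost_to_value_ratio` and `holders_to_coerce` are hypothetical helpers, the latter modelling the coercion point by counting how many holders an attacker must threaten at once to control a given amount of stake, assuming the attacker targets the largest holders first.

```python
def cost_to_value_ratio(value: float, cost_to_destroy: float) -> float:
    """Attacker's spend per dollar of value destroyed (higher favors the defender)."""
    return cost_to_destroy / value


def holders_to_coerce(stakes: list, needed: float) -> int:
    """Minimum number of holders an attacker must coerce at once to control
    `needed` worth of stake. Taking the largest holders first minimizes the count."""
    total = 0.0
    for count, stake in enumerate(sorted(stakes, reverse=True), start=1):
        total += stake
        if total >= needed:
            return count
    raise ValueError("the system does not hold that much stake")


# The essay's building figures.
big_ratio = cost_to_value_ratio(10_000_000, 100_000)  # one $10M building
small_ratio = cost_to_value_ratio(1_000_000, 30_000)  # one $1M building

# The same $10M of value split into ten $1M buildings needs ten small attacks.
cost_one_big = 100_000
cost_ten_small = 10 * 30_000

if __name__ == "__main__":
    print(f"ratio, $10M building: {big_ratio:.0%}")  # 1%
    print(f"ratio, $1M building:  {small_ratio:.0%}")  # 3%
    print(f"destroy one $10M vs ten $1M: ${cost_one_big:,} vs ${cost_ten_small:,}")
    # The $50M coercion example: one holder vs ten equal holders.
    print(holders_to_coerce([50_000_000], 50_000_000))  # 1
    print(holders_to_coerce([5_000_000] * 10, 50_000_000))  # 10
```

Splitting the same value across ten targets triples the attacker's total cost ($300,000 instead of $100,000) and, in the coercion case, multiplies the number of people who must be threatened simultaneously.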

What does this reasoning lead to? First of all, it pushes strongly in favor of proof of stake over proof of work, as computer hardware is easy to detect, regulate, or attack, whereas coins can be much more easily hidden (proof of stake also has strong attack resistance ). Second, it is a point in favor of having widely distributed development teams, including geographic distribution. Third, it implies that both the economic model and the fault-tolerance model need to be looked at when designing consensus protocols.

Finally, we can get to perhaps the most intricate argument of the three, collusion resistance. Collusion is difficult to define; perhaps the only truly valid way to put it is to simply say that collusion is “coordination that we don’t like”. There are many situations in real life where even though having perfect coordination between everyone would be ideal, one sub-group being able to coordinate while the others cannot is dangerous.

One simple example is antitrust law — deliberate regulatory barriers that get placed in order to make it more difficult for participants on one side of the marketplace to come together and act like a monopolist and get outsized profits at the expense of both the other side of the marketplace and general social welfare. Another example is , though those have proven difficult to enforce in practice. A much smaller example is a rule in preventing two players from playing many games against each other to try to raise one player’s score. No matter where you look, attempts to prevent undesired coordination in sophisticated institutions are everywhere.

In the case of blockchain protocols, the mathematical and economic reasoning behind the safety of the consensus often relies crucially on the uncoordinated choice model, or the assumption that the game consists of many small actors that make decisions independently. If any one actor gets more than 1/3 of the mining power in a proof of work system, they can gain outsized profits by selfish mining. However, can we really say that the uncoordinated choice model is realistic when 90% of the Bitcoin network’s mining power is well-coordinated enough to show up together at the same conference?
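The 1/3 figure matches the selfish-mining threshold from Eyal and Sirer's 2014 paper "Majority is not Enough". The sketch below transcribes their relative-revenue formula as a worked illustration; `alpha` is the attacker's share of hashpower and `gamma` is the fraction of honest miners who build on the attacker's block when two competing blocks tie. The published model rests on its own simplifying assumptions, so treat the numbers as illustrative rather than as predictions for any real network.

```python
def selfish_mining_revenue(alpha: float, gamma: float = 0.0) -> float:
    """Relative revenue of a selfish-mining pool (Eyal & Sirer, 2014).

    alpha: the pool's share of total hashpower (0 <= alpha <= 0.5).
    gamma: fraction of honest miners that mine on the pool's block in a tie.
    An honest miner with the same hashpower would earn exactly alpha.
    """
    numerator = alpha * (1 - alpha) ** 2 * (4 * alpha + gamma * (1 - 2 * alpha)) - alpha**3
    denominator = 1 - alpha * (1 + (2 - alpha) * alpha)
    return numerator / denominator


def profitability_threshold(gamma: float = 0.0) -> float:
    """Hashpower share above which selfish mining beats honest mining."""
    return (1 - gamma) / (3 - 2 * gamma)


if __name__ == "__main__":
    print(f"threshold with gamma=0: {profitability_threshold(0.0):.3f}")
    for alpha in (0.25, 0.30, 0.35, 0.40):
        r = selfish_mining_revenue(alpha)
        print(f"alpha={alpha:.2f}  selfish={r:.3f}  honest={alpha:.3f}")
```

Below the threshold the deviating pool earns less than its fair share; above it, the pool earns more than its hashpower would justify, which is why the uncoordinated choice model matters.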

Blockchain advocates also make the point that blockchains are more secure to build on because they can’t just change their rules arbitrarily on a whim whenever they want to, but this case would be difficult to defend if the developers of the software and protocol were all working for one company, were part of one family and sat in one room. The whole point is that these systems should not act like self-interested unitary monopolies. Hence, you can certainly make a case that blockchains would be more secure if they were more discoordinated.

However, this presents a fundamental paradox. Many communities, including Ethereum’s, are often praised for having a strong community spirit and being able to coordinate quickly on implementing, releasing and activating a hard fork to fix denial-of-service issues in the protocol within six days. But how can we foster and improve this good kind of coordination, but at the same time prevent “bad coordination” that consists of miners trying to screw everyone else over by repeatedly coordinating 51% attacks?

There are three ways to answer this:

Don’t bother mitigating undesired coordination; instead, try to build protocols that can resist it.

Try to find a happy medium that allows enough coordination for a protocol to evolve and move forward, but not enough to enable attacks.

Try to make a distinction between beneficial coordination and harmful coordination, and make the former easier and the latter harder.

The first approach makes up a large part of the Casper design philosophy. However, it by itself is insufficient, as relying on economics alone fails to deal with the other two categories of concerns about decentralization. The second is difficult to engineer explicitly, especially for the long term, but it does often happen accidentally. For example, the fact that bitcoin’s core developers generally speak English but miners generally speak Chinese can be viewed as a happy accident, as it creates a kind of “bicameral” governance that makes coordination more difficult, with the side benefit of reducing the risk of common mode failure, as the English and Chinese communities will reason at least somewhat separately due to distance and communication difficulties and are therefore less likely to both make the same mistake.

The third is a social challenge more than anything else; solutions in this regard may include:

Social interventions that try to increase participants’ loyalty to the community around the blockchain as a whole and substitute or discourage the possibility of the players on one side of a market becoming directly loyal to each other.

Promoting communication between different “sides of the market” in the same context, so as to reduce the possibility that either validators or developers or miners begin to see themselves as a “class” that must coordinate to defend their interests against other classes.

Designing the protocol in such a way as to reduce the incentive for validators/miners to engage in one-to-one “special relationships”, centralized relay networks and other similar super-protocol mechanisms.

This third kind of decentralization, decentralization as undesired-coordination-avoidance, is thus perhaps the most difficult to achieve, and tradeoffs are unavoidable. Perhaps the best solution is to rely heavily on the one group that is guaranteed to be fairly decentralized: the protocol’s users.

https://www.activism.net/cypherpunk/manifesto.html

Eric Hughes, Mar 1993

Privacy is necessary for an open society in the electronic age. Privacy is not secrecy. A private matter is something one doesn't want the whole world to know, but a secret matter is something one doesn't want anybody to know. Privacy is the power to selectively reveal oneself to the world.

If two parties have some sort of dealings, then each has a memory of their interaction. Each party can speak about their own memory of this; how could anyone prevent it? One could pass laws against it, but the freedom of speech, even more than privacy, is fundamental to an open society; we seek not to restrict any speech at all. If many parties speak together in the same forum, each can speak to all the others and aggregate together knowledge about individuals and other parties. The power of electronic communications has enabled such group speech, and it will not go away merely because we might want it to.

Since we desire privacy, we must ensure that each party to a transaction have knowledge only of that which is directly necessary for that transaction. Since any information can be spoken of, we must ensure that we reveal as little as possible. In most cases personal identity is not salient. When I purchase a magazine at a store and hand cash to the clerk, there is no need to know who I am. When I ask my electronic mail provider to send and receive messages, my provider need not know to whom I am speaking or what I am saying or what others are saying to me; my provider only need know how to get the message there and how much I owe them in fees. When my identity is revealed by the underlying mechanism of the transaction, I have no privacy. I cannot here selectively reveal myself; I must always reveal myself.

https://www.theblock.co/post/114225/layer-1-platforms-a-framework-for-comparison

The Block, Aug 2021

The Block Research was commissioned to create Layer-1 Platforms: A Framework for comparison, which provides a “look under the hood” at seven platforms: Algorand, Avalanche, Binance Smart Chain, Cosmos, Ethereum/Ethereum 2.0, Polkadot, and Solana. We assess their technical design, related ecosystem data, and qualitative factors such as key ecosystem members to get an understanding of how they differ. Having done this analysis, we draw some insights for what the future of the broader smart contract landscape could look like for years to come.

From explaining consensus algorithms in plain English to opining on the blockchain energy consumption debate to analyzing on-chain metrics, we cover a lot of ground in this report. Data, and lots of it, guides the way.


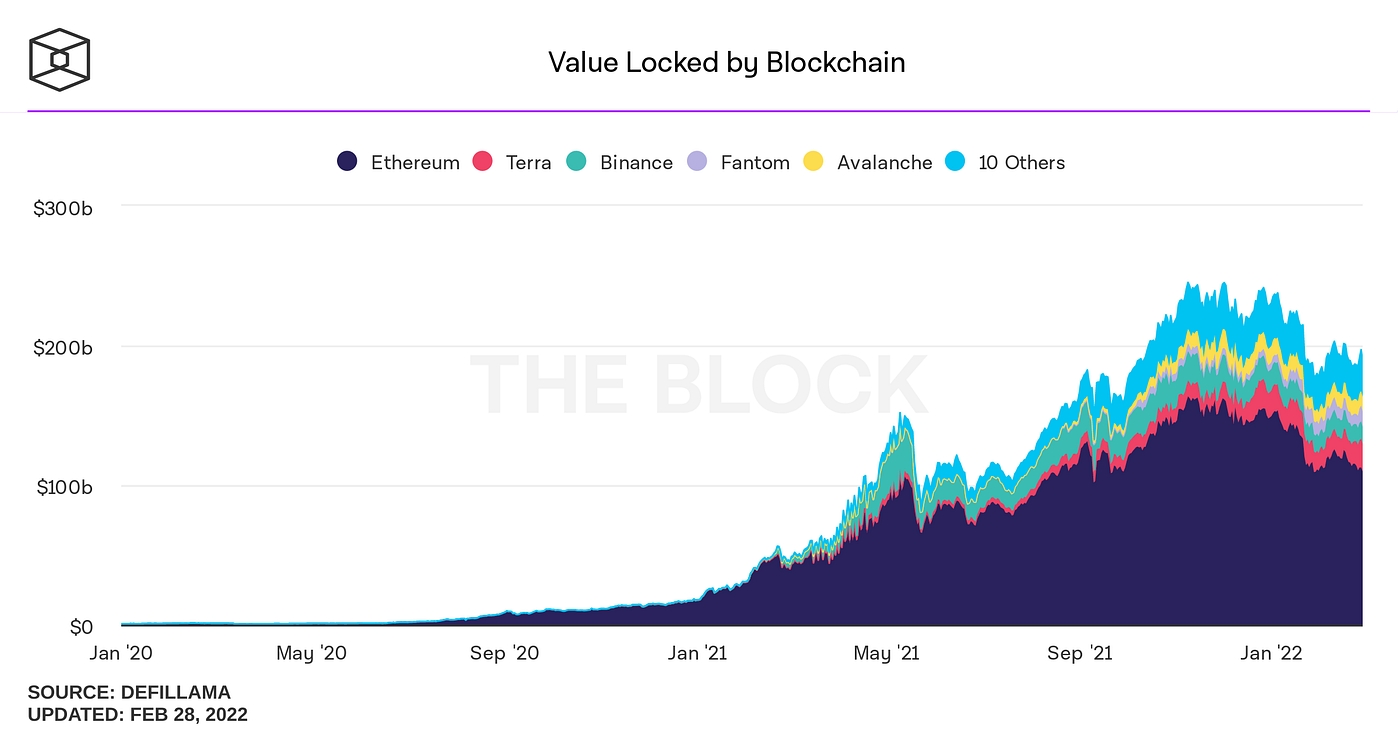

Six years after the inception of Ethereum, we have seen the revolutionary impact of general purpose blockchains on full display: first in the financial sphere, with the rise of decentralized finance (DeFi), and second in the cultural sphere, with the explosion of activity around non-fungible tokens (NFTs).

Therefore, privacy in an open society requires anonymous transaction systems. Until now, cash has been the primary such system. An anonymous transaction system is not a secret transaction system. An anonymous system empowers individuals to reveal their identity when desired and only when desired; this is the essence of privacy.

Privacy in an open society also requires cryptography. If I say something, I want it heard only by those for whom I intend it. If the content of my speech is available to the world, I have no privacy. To encrypt is to indicate the desire for privacy, and to encrypt with weak cryptography is to indicate not too much desire for privacy. Furthermore, to reveal one's identity with assurance when the default is anonymity requires the cryptographic signature.

We cannot expect governments, corporations, or other large, faceless organizations to grant us privacy out of their beneficence. It is to their advantage to speak of us, and we should expect that they will speak. To try to prevent their speech is to fight against the realities of information. Information does not just want to be free, it longs to be free. Information expands to fill the available storage space. Information is Rumor's younger, stronger cousin; Information is fleeter of foot, has more eyes, knows more, and understands less than Rumor.

We must defend our own privacy if we expect to have any. We must come together and create systems which allow anonymous transactions to take place. People have been defending their own privacy for centuries with whispers, darkness, envelopes, closed doors, secret handshakes, and couriers. The technologies of the past did not allow for strong privacy, but electronic technologies do.

We the Cypherpunks are dedicated to building anonymous systems. We are defending our privacy with cryptography, with anonymous mail forwarding systems, with digital signatures, and with electronic money.

Cypherpunks write code. We know that someone has to write software to defend privacy, and since we can't get privacy unless we all do, we're going to write it. We publish our code so that our fellow Cypherpunks may practice and play with it. Our code is free for all to use, worldwide. We don't much care if you don't approve of the software we write. We know that software can't be destroyed and that a widely dispersed system can't be shut down.

Cypherpunks deplore regulations on cryptography, for encryption is fundamentally a private act. The act of encryption, in fact, removes information from the public realm. Even laws against cryptography reach only so far as a nation's border and the arm of its violence. Cryptography will ineluctably spread over the whole globe, and with it the anonymous transactions systems that it makes possible.

For privacy to be widespread it must be part of a social contract. People must come and together deploy these systems for the common good. Privacy only extends so far as the cooperation of one's fellows in society. We the Cypherpunks seek your questions and your concerns and hope we may engage you so that we do not deceive ourselves. We will not, however, be moved out of our course because some may disagree with our goals.

The Cypherpunks are actively engaged in making the networks safer for privacy. Let us proceed together apace.

Onward.

Eric Hughes

9 March 1993

BitTorrent is logically decentralized similarly to how English is. Content delivery networks are similar, but are controlled by one single company.

Blockchains are politically decentralized (no one controls them) and architecturally decentralized (no infrastructural central point of failure) but they are logically centralized (there is one commonly agreed state and the system behaves like a single computer).

The majority of mining hardware is built by the same company, and this company gets bribed or coerced into implementing a backdoor that allows this hardware to be shut down at will.

In a proof of stake blockchain, 70% of the coins at stake are held at one exchange.

Mining algorithms should be designed in a way that minimizes the risk of centralization.

Ideally we use proof of stake to move away from hardware centralization risk entirely (though we should also be cautious of new risks that pop up due to proof of stake).

Clear norms about what fundamental properties the protocol is supposed to have, and what kinds of things should not be done, or at least should be done only under very extreme circumstances.

But anyone who has been closely following knows that we have only scratched the surface of discovering what experiences general purpose blockchains are capable of delivering.

And while the term “Ethereum killer” has fallen out of favor, the pace of development in the Layer 1 platform arena has only accelerated over the past years. Dozens of platforms have emerged.

Some are seeking to offer an easily adoptable alternative to Ethereum and challenge its status as the de facto choice for launching decentralized applications. Others are focused on giving developers the highest level of flexibility in building their own blockchains and creating cross-chain communication protocols.

With each passing year, a “one blockchain to rule them all” outcome fades further and further into the rearview. But analyzing these different platforms remains challenging.

They are surrounded by technical jargon. Digestible comparisons amongst them are few and far between. Yet they already compose a significant portion of the “investable crypto landscape” and are poised to support ecosystems orders of magnitude larger than what we have seen to date. Analyzing them will be an important task for years to come.

Analyzing smart contract platforms outside of the context of Ethereum is difficult. Analyzing Ethereum outside of the context of Bitcoin is equally difficult. So, this report starts with a brief introduction to Bitcoin before diving into the current state of Ethereum.

https://medium.com/coinmonks/stablecoin-primer-intro-54689d6fcdba

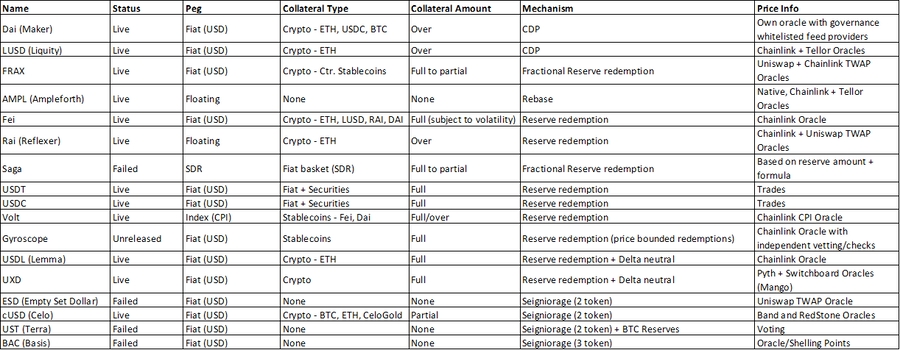

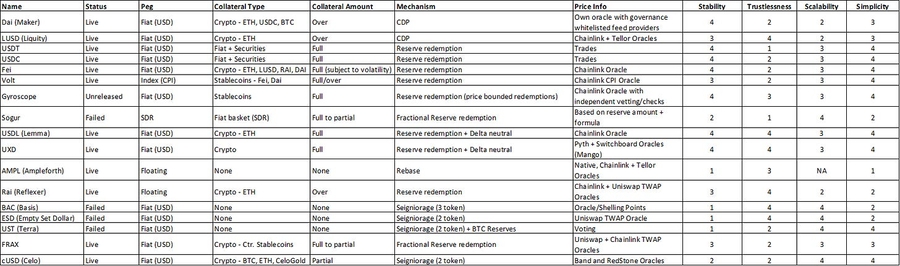

Osman Sarman, Mar 2022

This article is part of the Stablecoin Primer series.

Thinking about money by diedamla

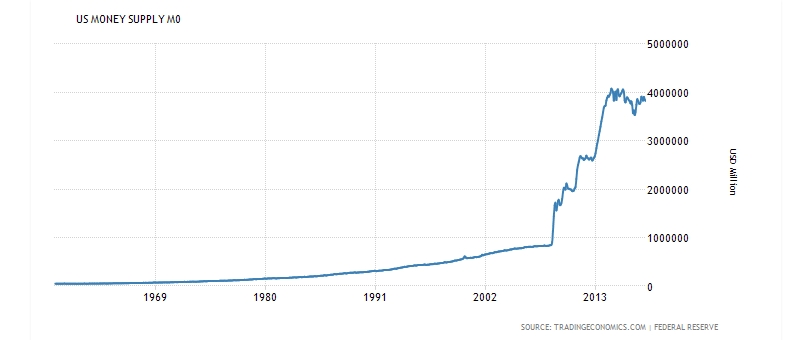

This year, when I went back home to Istanbul for the winter break, I was greeted by boxes of new stuff at my family house. I thought to myself, my years of begging for a New Year present are finally over. (Christmas is not a thing in Istanbul.) A few minutes later, I found my dad hastily unpacking the same boxes, which contained a bunch of fitness and cooking equipment, and definitely not the headphones I was wishing for. So I immediately proceeded to ask him what he was up to. He looked at me with a half-smiling face and said: “You must have missed that Lira is 16, it’s not worth keeping my money in cash.”

Having freshly arrived from the US, what he said didn’t immediately make sense. After all, I was expecting him to make a comment about working out or cooking. It took me a full day of acclimatization and catching up with other family members and friends, and local business owners to grasp what he really meant. So let me try to clarify what my dad intended to say:

“Over the past month, Turkish Lira lost close to 42% of its value against the US Dollar, making the USDTRY exchange rate ~16. If I were to keep my hard earned money in my bank account in Liras, it would be like setting it on fire, throwing it into the ocean, or giving it out for free on the street. Instead, I have decided to buy a rowing machine and a slow cooker because I believe that these will have more use to me in the short term and more value to me in the future when I resell them, compared to the Lira.”
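The dad's arithmetic can be made explicit. If the lira loses a fraction d of its dollar value, the USDTRY rate rises by a factor of 1/(1 - d). A minimal sketch using the two figures quoted above (a 42% loss and a rate of ~16); the starting rate it computes is only what those figures imply, not a quoted market rate, and the savings balance is hypothetical.

```python
def implied_start_rate(end_rate: float, value_lost: float) -> float:
    """USDTRY before a depreciation, given the rate after it.

    One lira buys 1/rate dollars, so losing `value_lost` of its value means
    1/end_rate = (1 - value_lost) / start_rate.
    """
    return end_rate * (1 - value_lost)


def dollar_value(lira: float, usdtry: float) -> float:
    """Dollar value of a lira balance at a given USDTRY rate."""
    return lira / usdtry


if __name__ == "__main__":
    end_rate = 16.0
    start_rate = implied_start_rate(end_rate, 0.42)
    savings = 100_000  # hypothetical balance kept in lira
    print(f"implied starting USDTRY: {start_rate:.2f}")
    print(f"savings in USD before: {dollar_value(savings, start_rate):,.0f}")
    print(f"savings in USD after:  {dollar_value(savings, end_rate):,.0f}")
```

Whatever the balance, its dollar value falls to 58% of what it was, which is the "setting it on fire" the quote describes.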

My dad is a reasonable person. He is not a finance guru or an econ wizard per se, but as a Board Certified clinical pathologist operating his own practice, he makes decisions that extend lives on a daily basis and puts his skin in the game (economy) as a business owner. So his reaction to my question made me think, a lot.

What does a slow cooker have to do with stablecoins? And pathology? Why is all of this relevant? Because this anecdote made me think about how abstract, macro-level monetary policies impact our lives so tangibly at the micro level. More specifically, while giving birth to this Primer, this anecdote made me question a few things:

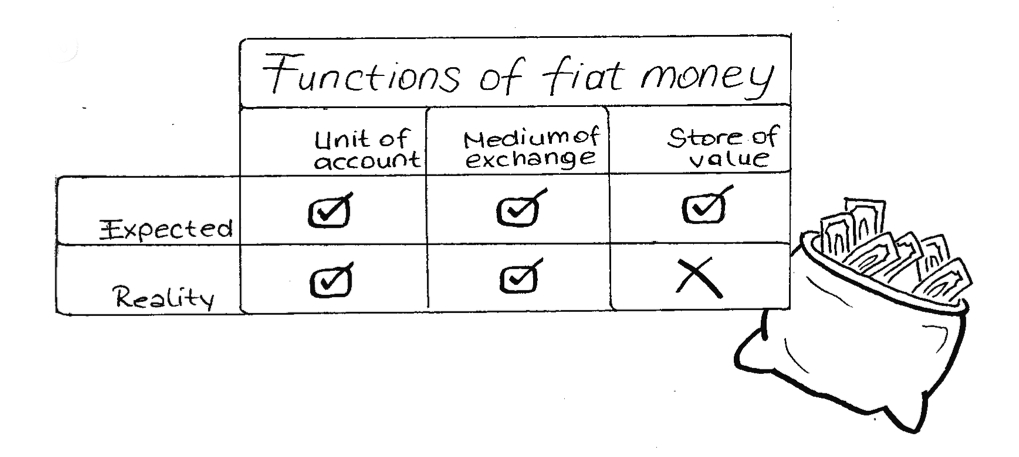

What do we instinctively use money for?

Why does fiat money as we know it, especially in certain parts of the world like Turkey, not meet all these expected uses?

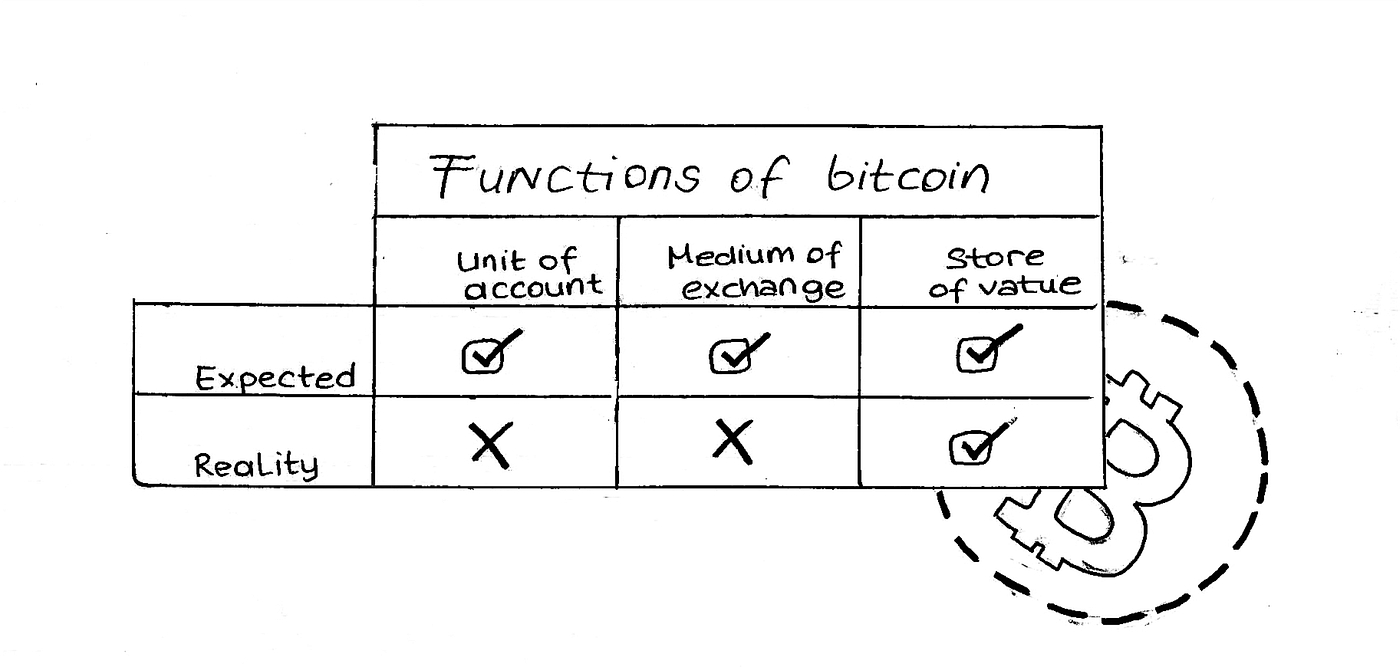

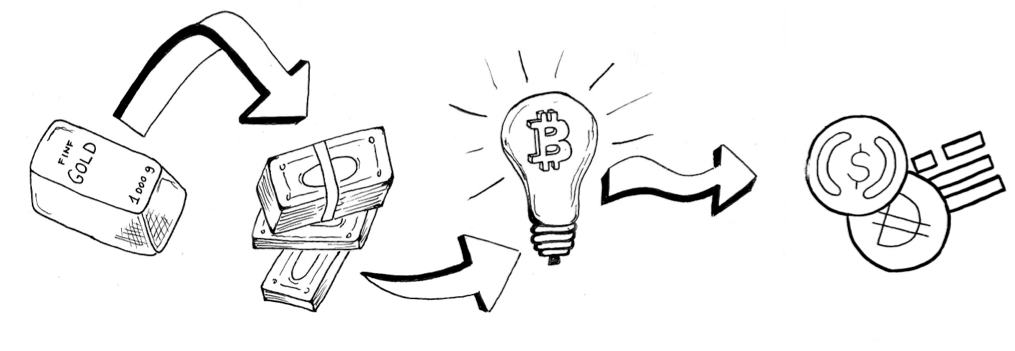

Why haven’t we already replaced fiat money with bitcoin, the promised peer-to-peer electronic cash?

If fiat and bitcoin do not meet all our money needs, could stablecoins be the last piece in the money puzzle?

Last piece in the money puzzle by

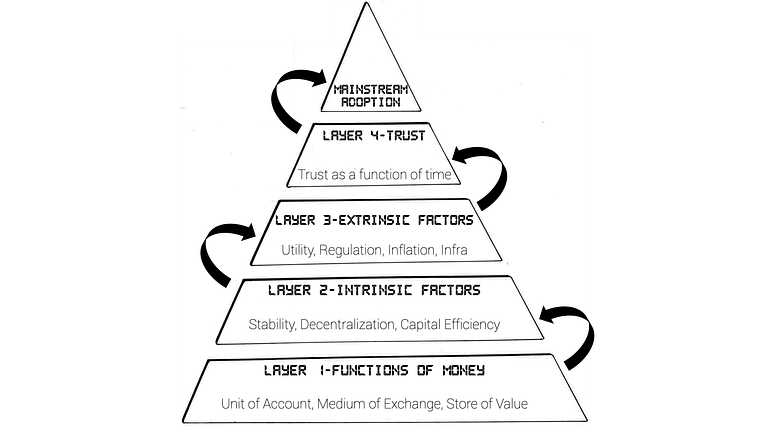

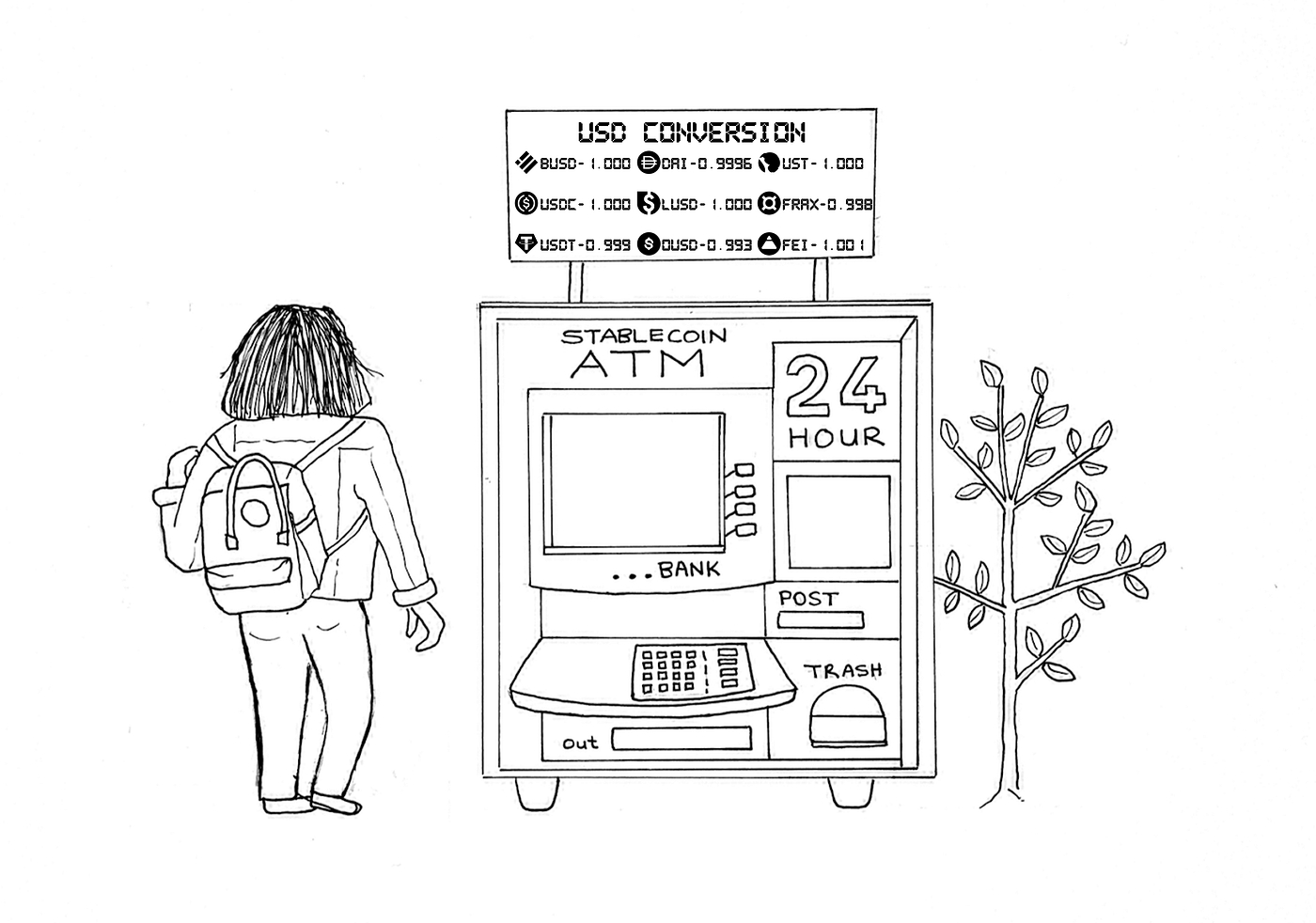

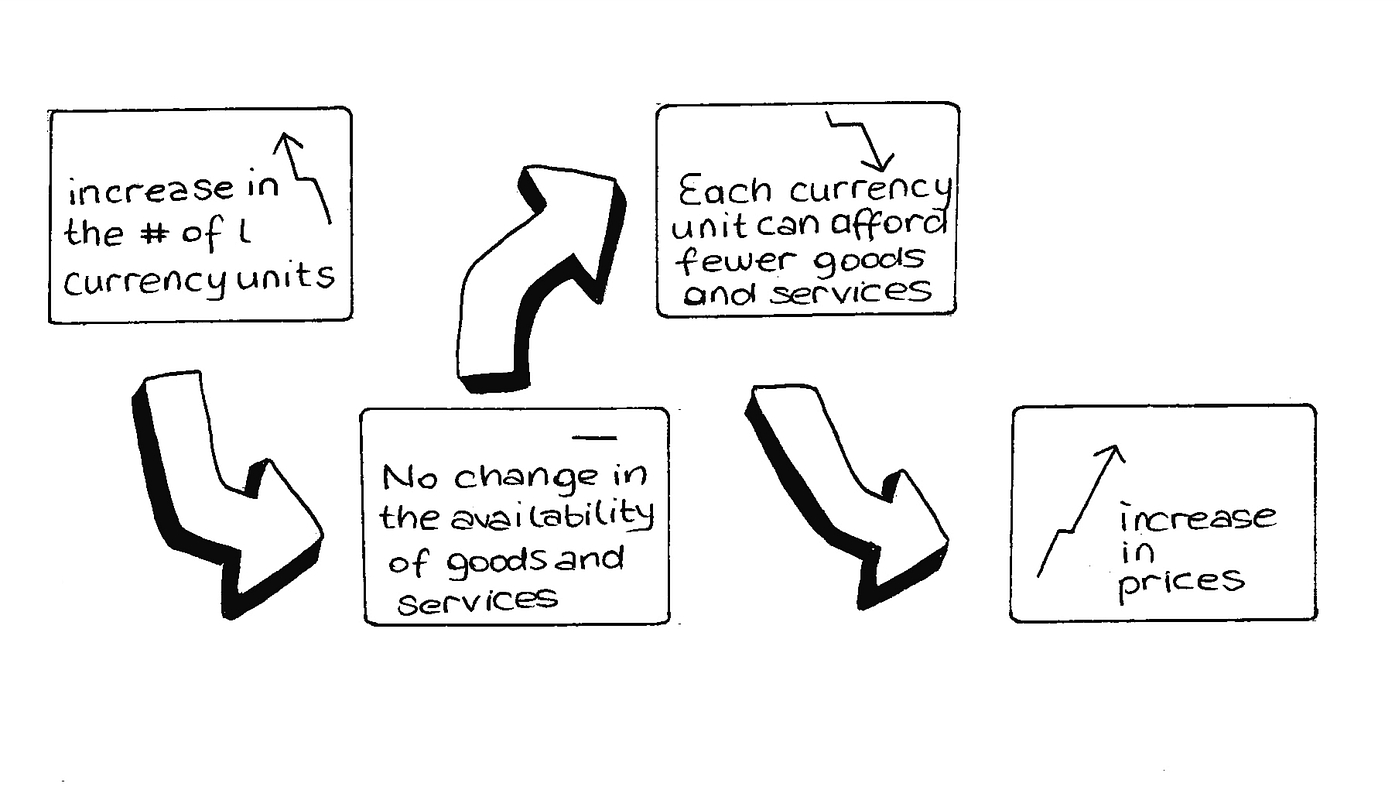

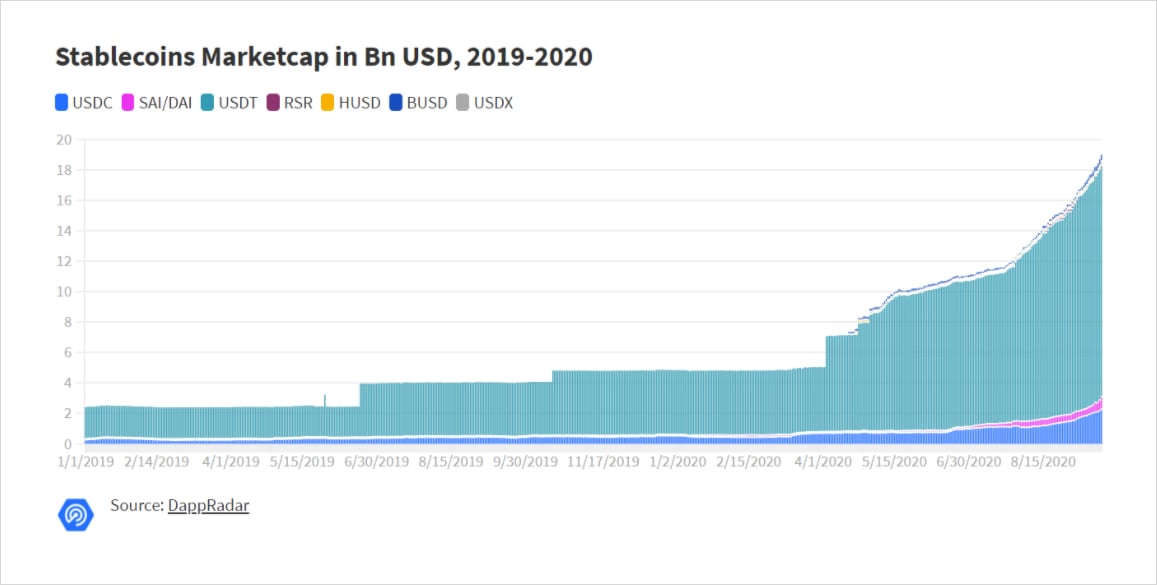

This Primer is ultimately a deep dive into stablecoins, with #4 above being the broader focus. However, to have a foundational understanding of the value propositions of stablecoins (whose supply grew 5x year-over-year to reach $181 billion), we first need to look at the problems they address. At the end of the day, like all tools and solutions, stablecoins were invented to address a specific need or problem we have. And if we are viewing stablecoins as an upgrade to the current forms of money, we need to understand the issues with the latter. Along those lines, Section 1 of the Primer focuses on fiat money’s inflation, bitcoin’s volatility, and an intro into stablecoins’ prowess.

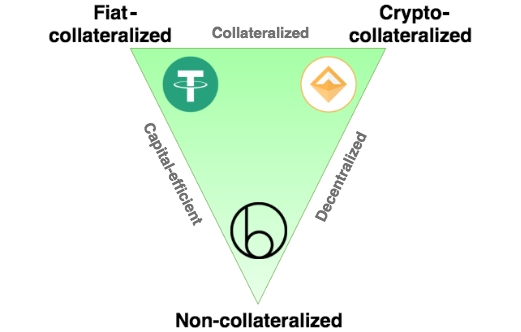

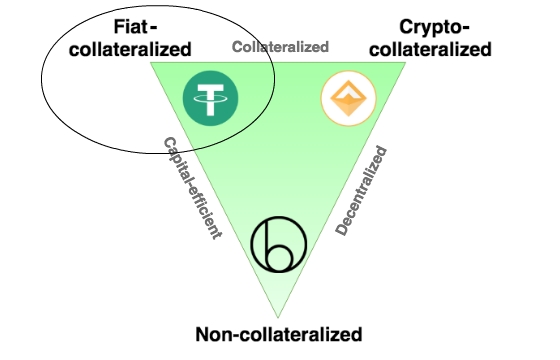

We then talk about the overall stablecoin landscape in Section 2. Here, we provide real life examples from around the world that highlight stablecoins’ strong product-market fit. Exploring further, we discuss the various ways people are using stablecoins. Building on the stablecoin landscape discussion, in Section 3 we highlight the three broad types of stablecoins that exist and the different parameters each stablecoin type is optimized for.

In Section 4, we deep dive into a select set of stablecoin projects to shed light on how different stablecoins achieve stability and the trade-offs they make. Finally, we end the Primer in Section 5 by discussing the circumstances that need to be true for stablecoins to reach mass adoption. For completeness, we also discuss the risks associated with stablecoins there.

https://medium.com/@MindWorksCap/liquid-staking-jostling-for-position-in-a-post-shanghai-ethereum-8e522c1841e6

MindWorks Capital, 12-Jan-23

The battle for staked Ether intensifies as withdrawals are slated to open in March after the Shanghai upgrade

Ethereum’s successful transition to Proof of Stake last year bid farewell to the Proof of Work miners and replaced them with Ether stakers, who now secure the blockchain.

https://fehrsam.xyz/blog/value-of-the-token-model

Fred Ehrsam, Jan 2017

Since my post on app coins (I think the more appropriate term is now tokens or blockchain tokens) we’ve seen projects use this model. The tide is turning. While venture capital was the main source of funding for Bitcoin and blockchain based startups up through 2015, 2016 saw over $100m of crowdsourced, non-venture money fund 60+ projects.

As momentum has increased, there have been some intelligent critiques of the model which help to home in on the value a token really provides. I especially enjoyed one such critique, where the author argues it’d be more efficient to port the smart contracts of a token to use Ether directly, eliminating the token. He argues this will happen because 1) it eliminates the cost to convert between Ether and tokens, and 2) Ether will be a “better money” than any token because it will be more broadly used, thus more stable, so people will prefer to just use Ether. I want to succinctly describe the core value of the token model and address some of the common critiques below.

Governance

People will want to have governance over their own communities separate from the global governance of Ethereum (or any other base blockchain). A token is necessary for this sub-governance. Not having sub-governance would be like anyone who owns USD being able to walk into a Google shareholder meeting and vote without owning Google stock just because Google shares happen to be denominated in USD. Or like everyone in the US being able to vote on the bylaws of a social club in San Francisco, just because it happens to exist in the US.

https://www.michael.com/en/resources/bitcoin-mining-and-the-environment

Michael Saylor

To Journalists, Investors, Regulators, & Anyone Else Interested in Bitcoin & the Environment,

Thanks for your inquiry. I thought I would share a few high level thoughts on Bitcoin Mining & the Environment.

1. Bitcoin Energy Utilization: Bitcoin runs on stranded, excess energy, generated at the edge of the grid, in places where there is no other demand, at times when no one else needs the electricity. Retail & commercial consumers of electricity in major population areas pay 5-10x more per kWh (10-20 cents per kWh) than bitcoin miners, who should be thought of as wholesale consumers of energy (normally budgeting 2-3 cents per kWh). The world produces more energy than it needs, and approximately a third of this energy is wasted. The last 15 basis points of energy power the entire Bitcoin Network; this is the least valued, cheapest margin of energy left after 99.85% of the energy in the world is allocated to other uses.

2. Bitcoin vs. Other Industries: Bitcoin mining is the most efficient, cleanest industrial use of electricity, and is improving its energy efficiency at the fastest rate across any major industry. Our metrics show ~59.5% of energy for bitcoin mining comes from sustainable sources and energy efficiency improved 46% YoY. No other industry comes close (consider planes, trains, automobiles, healthcare, banking, construction, precious metals, etc.). The bitcoin network keeps getting more energy efficient because of the relentless improvement in the semiconductors (SHA-256 ASICs) that power the bitcoin mining centers, combined with the halving of bitcoin mining rewards every four years that is built into the protocol. This results in a consistent 18-36% improvement year after year in energy efficiency. More details on this are included in the

A token offers security to community governance. While anyone can buy a token with Ether, in order to meaningfully influence the governance of that token community they’d have to buy a lot, increasing the price drastically while doing so. Finally, if they voted on something bad for the community, they would be destroying the value of what they own.

It may be that the rules around some communities and their protocols can be static and thus don’t need governance — but I imagine most will want the option to evolve.

Monetary policy

Ethereum may make monetary policy decisions like “let’s do 1% inflation to support the ongoing development of the Ethereum protocol.” A token built on Ethereum might want to do the same. And you’d want each to be able to do that independently. This is similar to what we are seeing with the Euro: you don’t necessarily want monetary union without fiscal union.

Aligning incentives

Tokens align incentives between developers, contributors, users, and investors. They allow everyone who wants to contribute to a project early the opportunity to get in on the ground floor. This overcomes the classic chicken and the egg problem for systems based on network effects.

Addressing the original critiques

“Just using Ether is more efficient because it eliminates the cost to convert between Ether and tokens”

The marginal cost argument for why it’s cheaper to do a contract on ETH is weak in my opinion. The cost of exchanging a token for ETH is already low at ~0.25% and will approach 0.

The more interesting economic force, in my opinion, is that Ether holders have a strong economic incentive to take any token protocol that works and port it directly to Ether. Let’s say Filecoin gets 30% of the Ethereum market cap. If Ether holders successfully port it — a simple matter of copying the code of the smart contracts — they absorb that value, and the value of their Ether goes up. So it seems like it will be tried. The question is whether or not it will work. In cases where a token is really needed for the reasons described above, I think the answer is no.

Separately, I wonder what happens here if/when tokens start to span multiple base blockchains. For example: what if Filecoin is its own virtualized blockchain, where its ledger state is maintained across blockchains. If that is possible, you can imagine a token not being dependent on any one blockchain.

“People will prefer to use Ether instead of tokens because it will be more stable in value”

Holding/pricing in Ether instead of a token can be both a benefit and a drawback. On the benefit side, Ether may be more stable. On the drawback side, these communities may not want to be subject to changes in the price of Ether. This becomes especially true if tokens can start living across multiple base blockchains. In any case, I think this point is the least important, since holding tokens is not required: they can instantly be converted in and out of Ether.

Conclusion

As with early internet startups, some token models don’t make sense. For every 1 huge hit there will be 3 minor successes and 100 failures, so we shouldn’t be surprised when some fail. However, the fundamentals of the token model are valuable and powerful. They allow communities to govern themselves, their economics, and rally a community in powerful ways that will allow open systems to flourish in a way that was previously impossible.

Thanks to Linda Xie.

3. Bitcoin Value Creation & Energy Intensity: Approximately $4-5 billion in electricity is used to power & secure a network that is worth $420 billion as of today, and settles $12 billion per day ($4 trillion per year). The value of the output is 100x the cost of the energy input. This makes Bitcoin far less energy intensive than Google, Netflix, or Facebook, and 1-2 orders of magnitude less energy intensive than traditional 20th century industries like airlines, logistics, retail, hospitality, & agriculture.
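The ratios in this paragraph can be reproduced from the figures it quotes. A minimal sketch, assuming the $4-5 billion electricity figure is an annual spend and taking its midpoint; on those assumptions the "100x" figure lines up with the network's market value rather than its annual settlement volume.

```python
DAYS_PER_YEAR = 365

# Figures quoted in the letter; the midpoint and the annual basis are assumptions.
energy_cost_usd = 4.5e9        # midpoint of "$4-5 billion in electricity"
network_value_usd = 420e9      # "a network that is worth $420 billion"
daily_settlement_usd = 12e9    # "settles $12 billion per day"

# $12B/day over a year is roughly the "$4 trillion per year" in the text.
annual_settlement_usd = daily_settlement_usd * DAYS_PER_YEAR

# Network value divided by energy spend gives the "100x" order of magnitude.
value_to_energy = network_value_usd / energy_cost_usd

print(f"annual settlement: ${annual_settlement_usd / 1e12:.2f} trillion")
print(f"network value / energy cost: {value_to_energy:.0f}x")
```

Using the ends of the quoted range instead of the midpoint moves the ratio between about 84x and 105x.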

4. Bitcoin vs. Other Cryptos: The only proven technique for creating a digital commodity is Proof of Work (bitcoin mining) deployed in a fair, equitable fashion (i.e. no pre-mine, no ICO, no controlling foundation, no primary software development team, no series of forced hard fork upgrades that materially change the monetary protocol). If we remove the dedicated hardware (SHA-256 ASICs) and the dedicated energy that powers those mining rigs, we are left with a network secured by proprietary software running on generic computers. That places all security & control of the network in the hands of a small group of software developers, who must create virtual machines doing virtual work with virtual energy in a virtual world to create virtual security. All attempts to date have resulted in a digital asset that meets the definition of an investment contract (i.e. digital security, not digital commodity). They all pass the Howey test and therefore look more like equities than commodities.

Regulators & legal experts have noted on many occasions that Proof of Stake networks are likely securities, not commodities, and we can expect them to be treated as such over time. PoS Crypto Securities may be appropriate for certain applications, but they are not suitable to serve as global, open, fair money or a global open settlement network. Therefore, it makes no sense to compare Proof of Stake networks to Bitcoin. The creation of a digital commodity without an issuer that serves as “digital gold” is an innovation (we have accomplished this only once in the history of the world with Bitcoin). The creation of a digital security or digital coupon on a shared database is utterly ordinary (it has been done 20,000 times in the crypto world, and 100,000+ times in the traditional world).

5. Bitcoin & Carbon Emissions: 99.92% of carbon emissions in the world are due to industrial uses of energy other than bitcoin mining. Bitcoin mining is neither the problem nor the solution to the challenge of reducing carbon emissions. It is in fact a rounding error and would hardly be noticed if it were not for the competitive guerrilla marketing activities of other crypto promoters & lobbyists that seek to focus negative attention on Proof of Work mining in order to distract regulators, politicians, & the general public from the inconvenient truth that Proof of Stake crypto assets are generally unregistered securities trading on unregulated exchanges to the detriment of the retail investing public.

6. Bitcoin & Environmental Benefits: There is an increasing awareness that Bitcoin is quite beneficial to the environment because it can be deployed to monetize stranded natural gas or methane gas energy sources. Methane gas emissions' curtailment is particularly compelling and Dan Batten (https://batcoinz.com/) has written some impressive papers on this subject. It has also become clear that energy grids that rely primarily on sustainable power sources like wind, hydro, & solar can be unreliable at times due to lack of water, sunlight, or wind. In this case, they need to be paired with a large, flexible electricity consumer like a bitcoin miner in order to develop grid resilience & finance the buildout of additional capacity necessary to responsibly power major industrial/population centers. The recent major curtailment of Bitcoin mining load on the ERCOT grid in Texas illustrates the benefits of bitcoin mining to sustainable power providers. No other industrial energy consumer is so well suited to monetize excess power as well as curtail flexibly during periods of energy shortfall & production volatility.

7. Bitcoin & Global Energy: Bitcoin maximalists believe that Bitcoin is an instrument of economic empowerment for 8 billion people around the world. This is supported by the ability of a bitcoin miner to monetize any power source, anywhere, anytime, at any scale. Bitcoin mining can bring a clean, profitable and modern industry that generates hard currency to a remote location in the developing world, connected only via satellite link. All that is needed is some excess electricity generated from a waterfall, geothermal source, or miscellaneous excess energy deposit. Google, Netflix, and Apple won’t be setting up data centers in Central Africa that export services to their wealthy western clients anytime soon due to constraints on bandwidth, privacy, & requirements for consistent power flow, but bitcoin miners are not hampered by these constraints. They can utilize erratic power supplies with low bandwidth in remote locations and generate valuable bitcoin without prejudice, just as if they were in a suburb of NYC, LA, or SF. Even now, Bitcoin miners are everywhere and will continue to spread (through Africa, Asia, South America, etc.) wherever there is excess energy and anyone with aspirations for a better life. Bitcoin is an egalitarian financial asset offering financial inclusion to all, and bitcoin mining is an egalitarian technology industry offering commercial inclusion to anyone with the energy & engineering capability to operate a mining center.

Thank you for your interest, and best wishes with your article, research, legislation, or investments.

Michael Saylor, Executive Chairman, MicroStrategy

References:

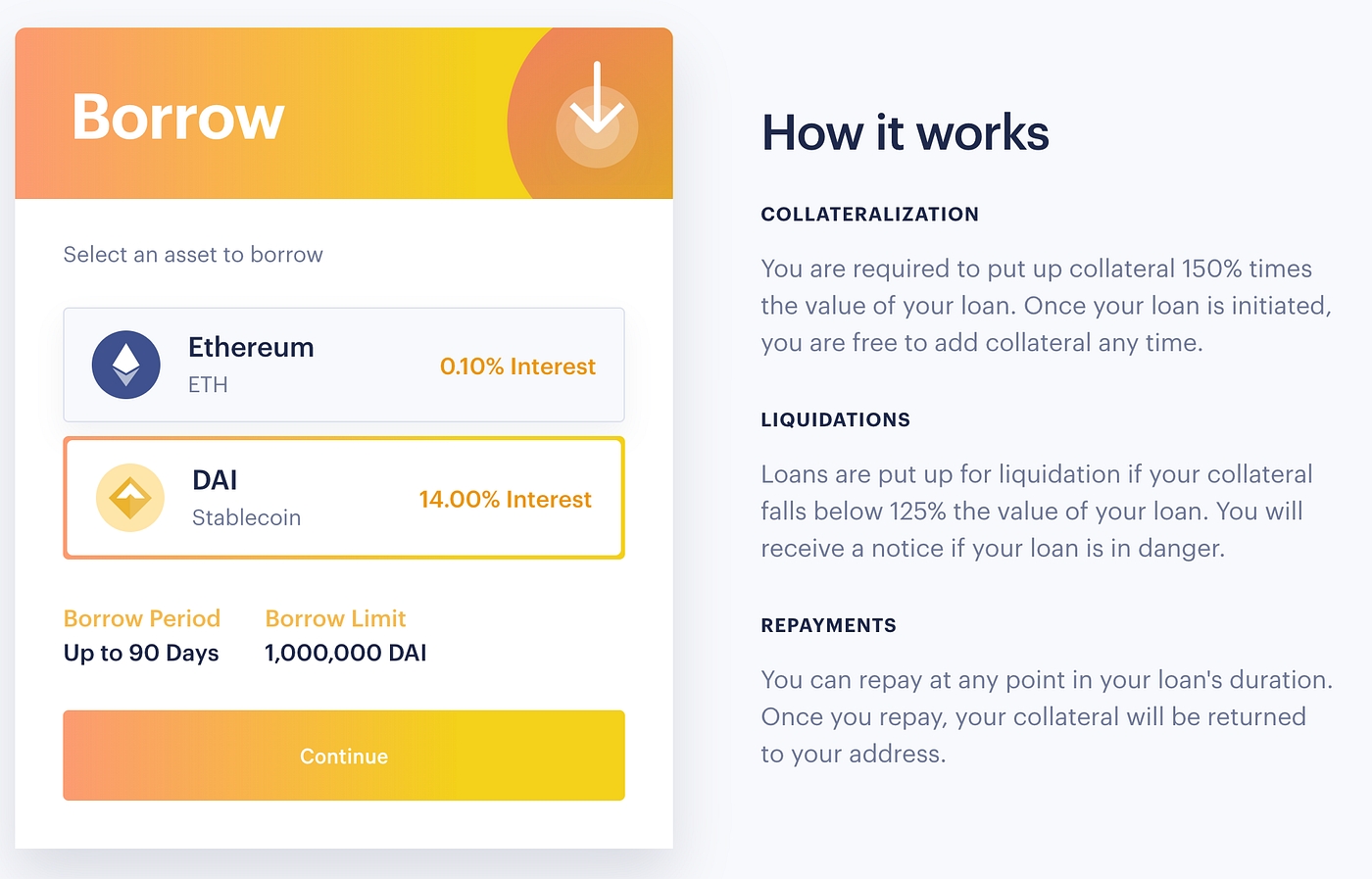

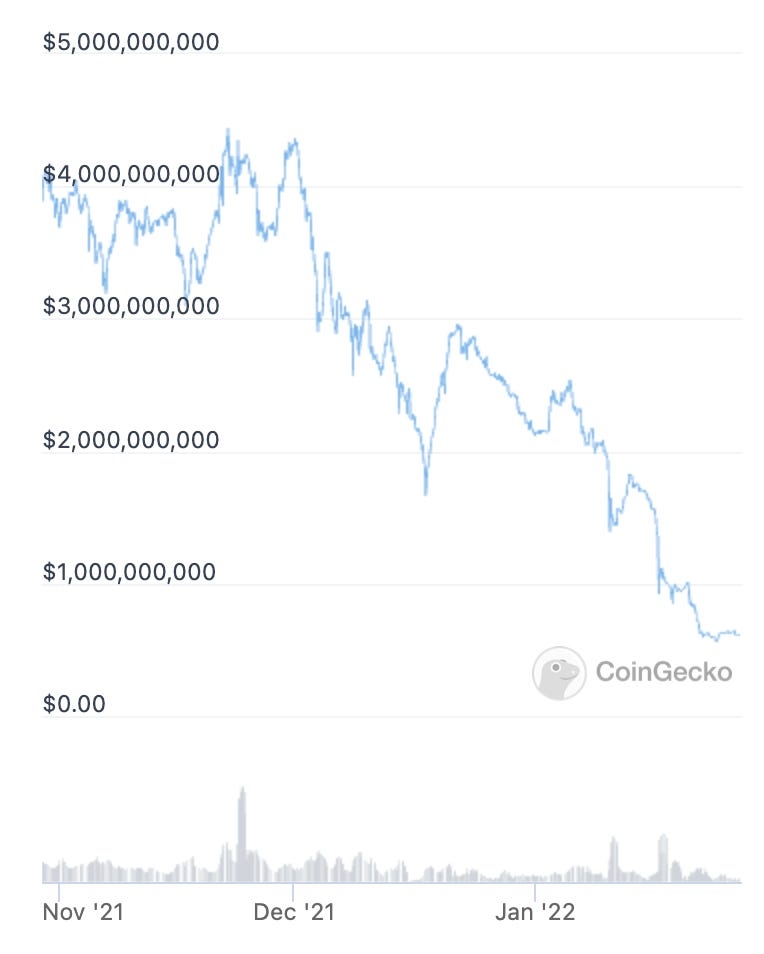

However, this could create a lot of barriers for ordinary users who want to participate in proof of stake, as it requires a high upfront cost (32 ETH was ~$42,000 as of Jan 9, 2023), demanding hardware to run the software, and the technical know-how to run validator nodes.

Traditional staking is also hugely illiquid, as staked coins cannot be withdrawn immediately, even after the Shanghai upgrade. Instead, validators have to queue to exit the staking chain in order to preserve network security.
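The exit queue is rate-limited by the consensus specification's churn limit. A minimal sketch of the rule as it stood around the Shanghai/Capella upgrade: at most max(4, active_validators // 65536) validators may exit per epoch, and an epoch is 32 slots of 12 seconds. Later upgrades changed how churn is computed, and the validator counts below are hypothetical inputs.

```python
# Consensus-layer constants as used around the Shanghai/Capella upgrade.
MIN_PER_EPOCH_CHURN_LIMIT = 4
CHURN_LIMIT_QUOTIENT = 2**16
SLOTS_PER_EPOCH = 32
SECONDS_PER_SLOT = 12
EPOCHS_PER_DAY = 24 * 60 * 60 // (SLOTS_PER_EPOCH * SECONDS_PER_SLOT)  # 225


def churn_limit(active_validators: int) -> int:
    """Maximum number of validators that may exit per epoch."""
    return max(MIN_PER_EPOCH_CHURN_LIMIT, active_validators // CHURN_LIMIT_QUOTIENT)


def days_to_clear_exit_queue(queue_length: int, active_validators: int) -> float:
    """Rough days to process an exit queue.

    Simplification: treats the active validator count (and so the churn
    limit) as constant while the queue drains.
    """
    exits_per_day = churn_limit(active_validators) * EPOCHS_PER_DAY
    return queue_length / exits_per_day


if __name__ == "__main__":
    active = 500_000  # hypothetical active validator count
    print(f"exits per epoch: {churn_limit(active)}")
    print(f"exits per day:   {churn_limit(active) * EPOCHS_PER_DAY}")
    # Hypothetical: 10% of validators request an exit at the same time.
    print(f"days to clear:   {days_to_clear_exit_queue(active // 10, active):.1f}")
```

The design choice is deliberate: capping churn keeps the validator set from changing faster than the chain's finality guarantees can tolerate, at the cost of making large exits slow.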

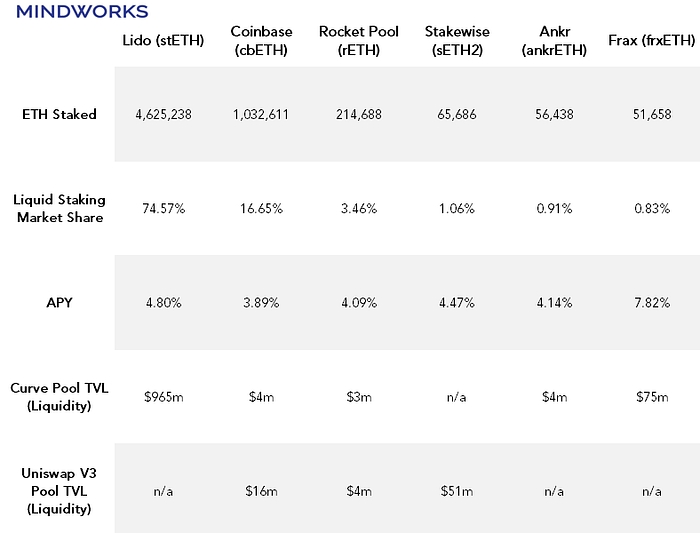

Because of the high barrier to entry and lack of convenience, liquid staking has become a hugely popular way for users to stake their ETH without having to run any software while maintaining liquidity. Lido, the largest Ethereum liquid staking provider, has over 4.6 million Ether staked on its platform, accounting for 29% of all ETH staked.

The Ethereum staking landscape is also poised to undergo a huge transformation after the Shanghai upgrade, the next planned upgrade of the Ethereum network. Shanghai will be highly impactful for ETH staking as it introduces a long-awaited feature: withdrawals. Before this upgrade, ETH deposited onto the beacon chain to participate in proof of stake has no way to be withdrawn back to the mainnet.

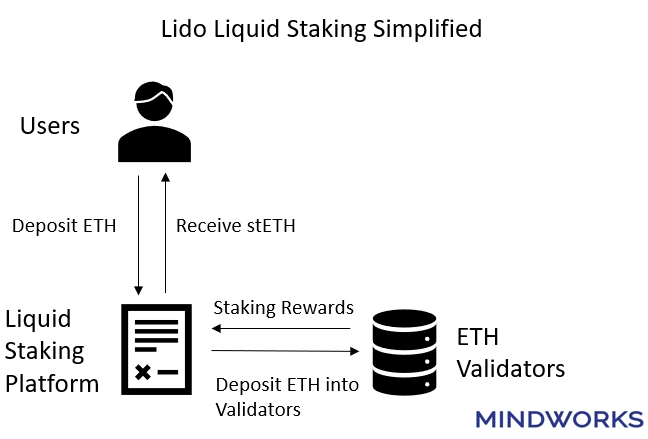

Liquid staking’s process is actually pretty simple. Users deposit tokens into a smart contract, which then stakes them with affiliated validators. In return, users receive a token that represents the amount they have deposited into the liquid staking platform.

Let’s use Lido as an example. Users deposit ETH into Lido and receive stETH in return. The stETH token represents the ETH that the user has deposited into Lido. stETH balances rebase automatically, increasing in line with the rewards earned by Lido-affiliated validators. Users can also freely trade and transfer their stETH without being subject to lockups.
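The rebasing behavior can be sketched with a share-based ledger, which is broadly how stETH balances work: each holder owns shares, and a balance is shares × total pooled ETH ÷ total shares, so when validator rewards are added to the pool every balance grows without any transfer taking place. This is a minimal, hypothetical model; the class and method names are illustrative, not Lido's contract API, and fees, oracles, slashing and withdrawals are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class RebasingPool:
    """Toy share-based liquid staking token (hypothetical; not Lido's contract)."""

    total_pooled_eth: float = 0.0
    total_shares: float = 0.0
    shares: dict[str, float] = field(default_factory=dict)

    def deposit(self, user: str, eth: float) -> None:
        # Mint shares at the current ETH-per-share rate (1:1 for the first deposit).
        if self.total_shares == 0:
            minted = eth
        else:
            minted = eth * self.total_shares / self.total_pooled_eth
        self.shares[user] = self.shares.get(user, 0.0) + minted
        self.total_shares += minted
        self.total_pooled_eth += eth

    def report_rewards(self, eth_rewards: float) -> None:
        # Validator rewards raise pooled ETH; nobody's share count changes.
        self.total_pooled_eth += eth_rewards

    def balance_of(self, user: str) -> float:
        # The "rebased" balance is derived from shares on every read.
        if self.total_shares == 0:
            return 0.0
        return self.shares.get(user, 0.0) * self.total_pooled_eth / self.total_shares


if __name__ == "__main__":
    pool = RebasingPool()
    pool.deposit("alice", 32.0)
    pool.deposit("bob", 8.0)
    pool.report_rewards(2.0)  # hypothetical 5% reward on 40 pooled ETH
    print(pool.balance_of("alice"), pool.balance_of("bob"))
```

Storing shares rather than balances means a reward report is a single update to `total_pooled_eth` instead of a write to every holder's balance.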

You might be wondering at this point whether liquid staking has solved all the problems of traditional staking: no upfront costs (besides purchasing the ETH), no hardware or software to run, and the ETH stays liquid. In reality, liquid staking solves most of these problems, but it introduces several of its own.

Firstly, liquid staking is not trustless. By participating in liquid staking, you are trusting the validators chosen by your liquid staking platform not to act maliciously or carelessly, since misbehaving validators can be slashed, which permanently destroys some or all of the deposited ETH.

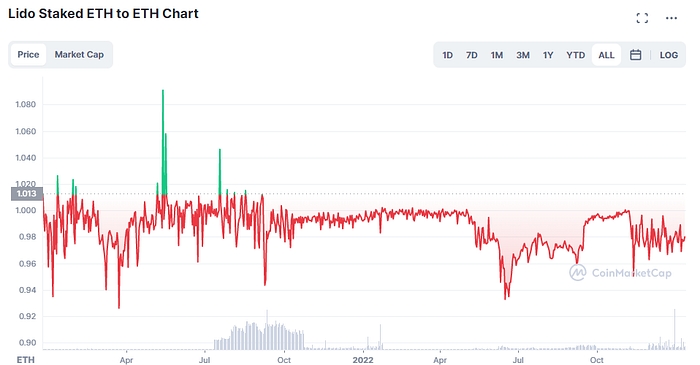

Secondly, the token you receive in return for depositing, such as stETH, will not necessarily hold its value relative to ETH, as you cannot currently redeem it for ETH and must sell it on the secondary market to convert it. In fact, stETH has consistently traded below peg since launch, so traders who have to exit quickly usually face a small loss in ETH terms.

Lastly, by relying too heavily on liquid staking platforms, Ethereum could become more centralized, as one platform could reach a critical share of all validators. Some members of the Ethereum community have already spoken out about Lido's dominance and whether it could endanger Ethereum.

While liquid staking is available on most proof-of-stake blockchains, we want to focus on Ethereum because it is the largest proof-of-stake blockchain by market capitalization and because withdrawals could open as soon as March.

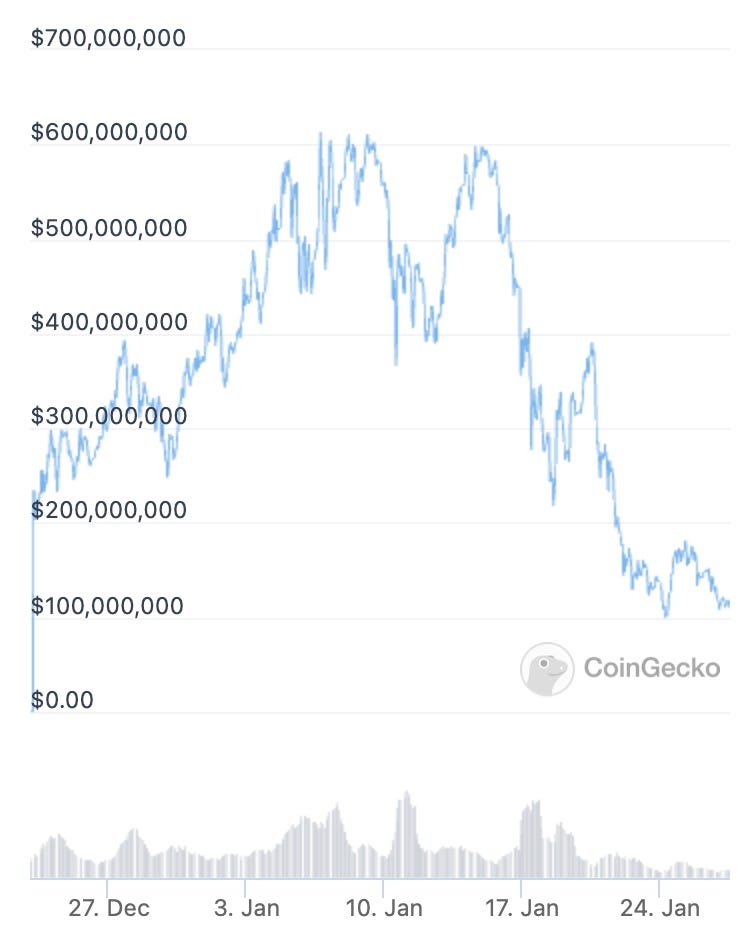

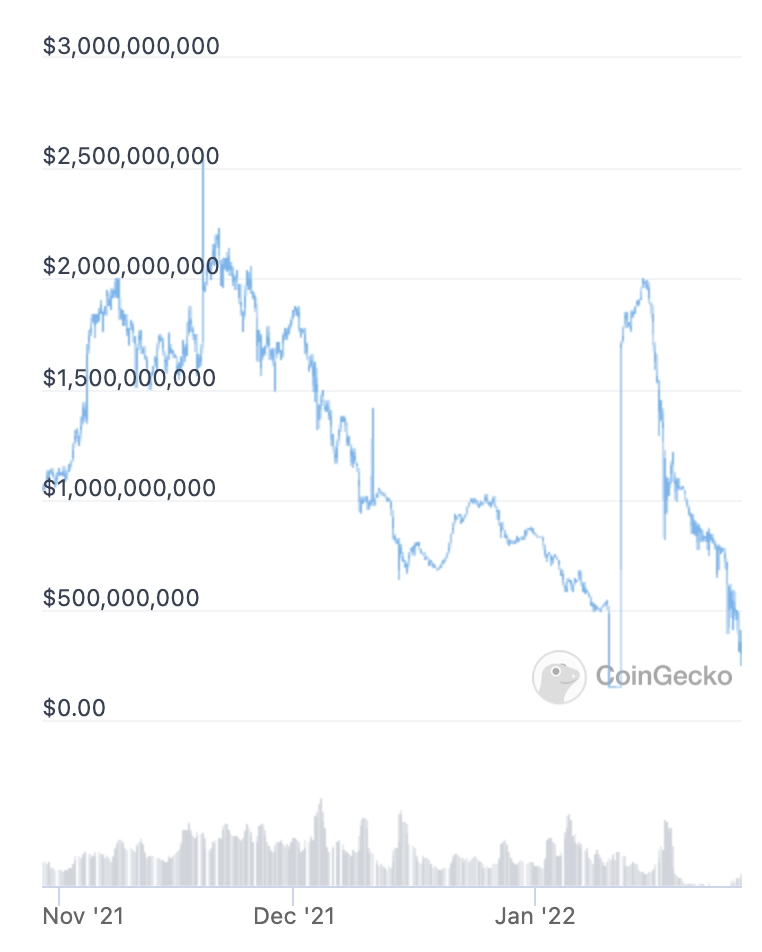

As we can see in the chart above, Lido currently dominates the Ethereum liquid staking market with a 75% market share. This dominance stems largely from its first-mover advantage, as well as from wider adoption by other DeFi applications such as Aave, which gives stETH more use cases.

While Lido currently has a stranglehold over liquid staking on Ethereum, the market is about to enter a new phase of adoption, as ETH staked on the beacon chain could soon be withdrawn once the Shanghai upgrade enables withdrawals.

This means that ALL ETH currently staked could potentially be withdrawn and staked elsewhere, whether it currently sits in liquid staking pools or with home solo stakers.

Given the advantages of liquid staking mentioned above, we will in all likelihood see the amount of ETH in liquid staking increase as withdrawals open, thanks to its superior user experience compared with alternatives such as home staking and permissioned staking pools.

To understand Lido’s dominance, we need to understand why stETH is by far the most popular liquid staking derivative (LSD) on Ethereum.

Firstly, stETH has the deepest liquidity of all the LSDs. According to DefiLlama, one could trade $500 million worth of ETH into stETH and still suffer less than 1% slippage. By comparison, a $10 million trade into cbETH, the second-largest LSD by deposits, would likely suffer slippage above 1%. So for whales or crypto funds concerned about liquidity, stETH is likely the only viable choice.
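To see why pool depth matters so much, here is a sketch using the simple constant-product (x*y=k) AMM formula. This is an illustrative assumption: pools built for pegged assets, such as Curve's, use a flatter StableSwap curve that gives far lower slippage near the peg, and the reserve sizes below are hypothetical.

```python
def constant_product_slippage(reserve_in: float, reserve_out: float,
                              amount_in: float) -> float:
    """Fractional slippage of a swap in an x*y=k pool, ignoring fees.

    Slippage is measured against the pre-trade spot price reserve_out / reserve_in.
    """
    # Output that keeps reserve_in * reserve_out constant after the trade.
    amount_out = reserve_out * amount_in / (reserve_in + amount_in)
    # Output the trader would get if the whole trade filled at the spot price.
    spot_out = amount_in * reserve_out / reserve_in
    return 1 - amount_out / spot_out
```

In this model slippage works out to amount_in / (reserve_in + amount_in), so for the same 10-unit trade, a pool with 100,000 units in reserve slips roughly 100 times less than one with 1,000 units. That is why large traders gravitate to the deepest LSD market.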

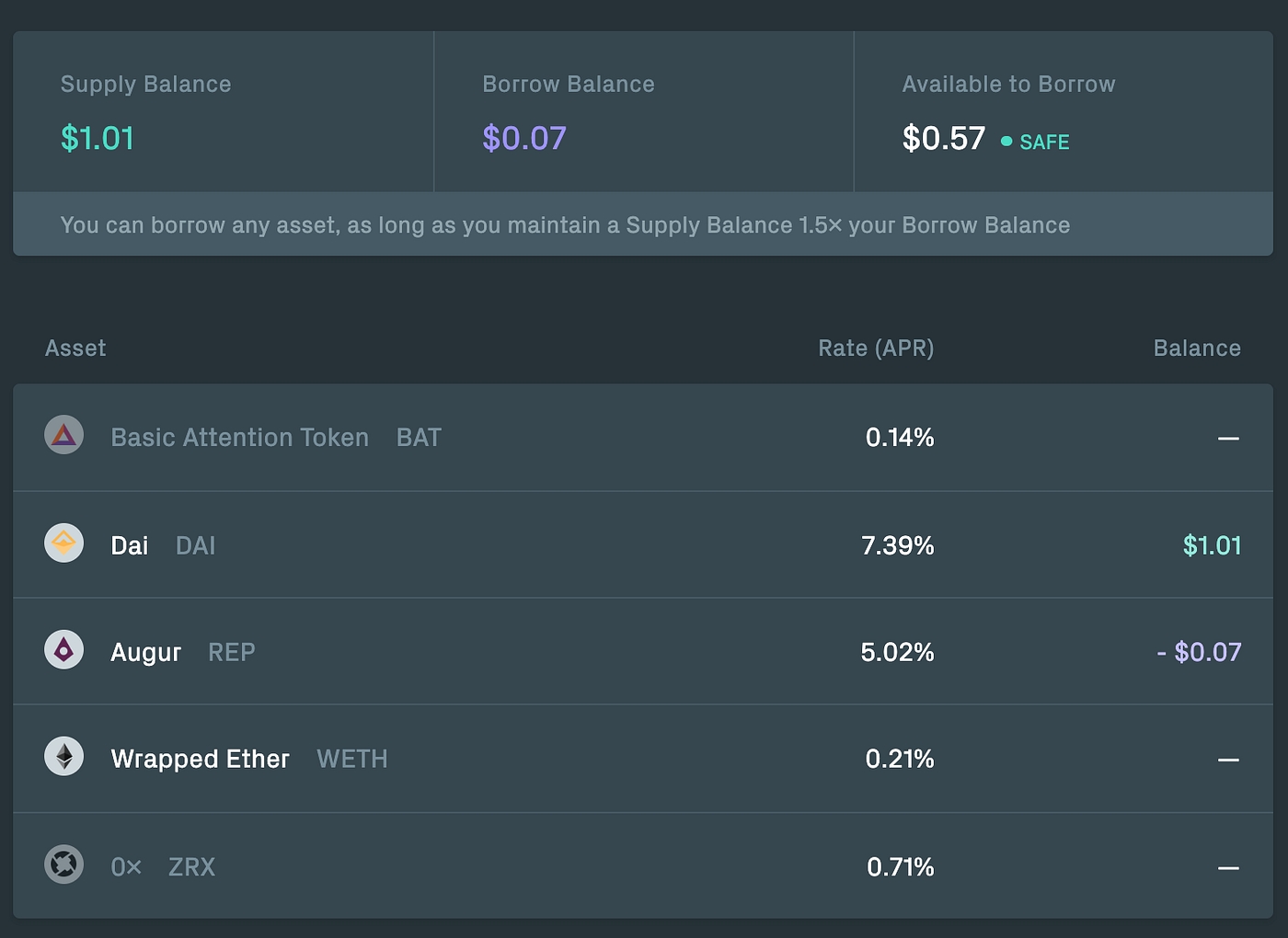

Secondly, use cases are key for LSDs. One key use case stETH has that others lack is leveraged staking: you use your LSD as collateral to borrow ETH, then convert the borrowed ETH into more of the LSD, often repeating the loop, so that you multiply your yield through leverage. Aave, the largest lending platform on Ethereum, accepts stETH as the only LSD on its platform. So if you would like to do leveraged staking on the most liquid lending platform, stETH is again your only viable option.
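The leverage loop can be sketched as follows. The function name and parameters are hypothetical, and the sketch assumes the borrowed ETH converts 1:1 into the LSD with no swap fees, gas or depeg; real positions also face liquidation if the LSD trades down against the borrowed ETH.

```python
def leveraged_staking(principal: float, ltv: float, loops: int,
                      staking_apr: float, borrow_apr: float) -> tuple[float, float]:
    """Simulate looping an LSD through a lending market (hypothetical model).

    Each loop borrows `ltv` times the most recently added collateral and
    converts it 1:1 into more LSD. Returns (total LSD position,
    net APR earned on the original principal).
    """
    position = principal       # total LSD held as collateral
    debt = 0.0                 # total ETH borrowed
    new_collateral = principal
    for _ in range(loops):
        borrowed = new_collateral * ltv
        debt += borrowed
        position += borrowed
        new_collateral = borrowed
    # Staking rewards accrue on the whole position; interest accrues on the debt.
    net_yield = position * staking_apr - debt * borrow_apr
    return position, net_yield / principal
```

With a 50% loan-to-value ratio, 10 ETH grows to 17.5 ETH of exposure after two loops and approaches 10 / (1 - 0.5) = 20 ETH as the loops continue. With a hypothetical 4% staking APR and 2% borrow APR, the two-loop position earns 5.5% on the original 10 ETH; if the borrow rate exceeded the staking yield, the same leverage would amplify losses instead.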

So can anyone challenge Lido? For now, it is difficult to foresee any competitor taking significant market share from Lido, given the advantages described above.

That said, some competing protocols are innovating to attract stakers onto their platforms. For example, Rocket Pool is putting decentralization at the heart of its messaging, allowing anyone to run a validator on its protocol with 16 ETH. Another competitor, Frax Finance, is leveraging its stablecoin protocol and innovative LSD token design to attract liquidity and increase staking APY for holders.

The stakes are high for liquid staking protocols in a post-Shanghai world, but the heightened competition should result in a better overall user experience and new use cases that will further enrich the DeFi landscape.

One innovation we would like to see is leveraged staking on L2 networks. This would increase adoption of both the L2 network and the liquid staking protocol, while enabling retail users to participate without paying high fees on the mainnet.

As we count down to the Shanghai upgrade, we cannot wait to see the battle unfold in this new chapter of Ethereum's staking landscape.

Disclaimer: MindWorks Capital has not invested in any liquid staking platforms. Our team members hold ETH, cbETH, stETH and FXS. These statements are intended to disclose any conflict of interest and should not be misconstrued as a recommendation to purchase any token. This content is for informational purposes only, and you should not make decisions based solely on it. This is not investment advice.

https://www.coinbase.com/learn/market-updates/around-the-block-issue-14

Justin Mart and Ryan Yi, May 2021.

Where are all the DeFi insurance markets?

Insurance may not be the most exciting part of crypto, but it is a key piece that’s missing in DeFi today. The lack of liquid insurance markets prevents the maturation of DeFi and holds back additional capital from participating. Let’s take a look at why, and explore the different paths to providing insurance protection.

Insurance: The “so what?”

Insurance empowers individuals to take risks by socializing the cost of catastrophic events. If everyone were nakedly exposed to all of life's risks, we would be much more careful. Readily available insurance coverage gives us confidence to deploy capital in emerging financial markets.

Let’s look at the relationship between risk and yield. If you squint, risk and yield are inextricably linked -- higher yields imply more implicit risk. At least, this is true for efficient and mature markets. While DeFi isn’t a mature market today, the significant yields are still an indication of higher latent risk.

Principally, this risk comes from the complexity inherent to DeFi and programmatic money. Hidden bugs in the code are nightmare fuel for investors. Even worse, quantifying this risk is a mix of rare technical skill combined with what seems like black-magic guesses. The industry is simply too nascent to have complete confidence in just how risky DeFi really is. This makes insurance even more critical.

Clearly, strong insurance markets are a critical missing primitive and would unlock significant new capital if solved. So why haven’t we seen DeFi insurance markets at scale?

There are a few challenges in sourcing liquidity:

Who acts as underwriter, and how is risk priced? No matter the model, someone has to underwrite policies or price insurance premiums. Truth is, nobody can confidently assess the risks inherent in DeFi, as this is a new field and protocols can break in unexpected ways. The best indication of safety may well be the Lindy Effect -- the longer protocols survive with millions in TVL (total value locked), the safer they are proven to be.

Underwriter yield must compete with DeFi yield. When DeFi yields are subsidized by yield farming, even “risk-adjusted” positions often favor participating directly in DeFi protocols instead of acting as an underwriter or participating in insurance markets.

Yield generation for underwriters is generally limited to payments on insurance premiums. Traditional insurance markets earn a majority of revenue from re-investing collateral into safe yield-generating products. In DeFi what is considered a “safe” investment for pooled funds? Placing them back in DeFi protocols re-introduces some of the same risks they are meant to cover.

And there are a few natural constraints on how to design insurance products:

Insurance markets need to be capital efficient. Insurance works best when $1 in a pool of collateral can underwrite more than $1 in multiple policies covering multiple protocols. Markets that do not offer leverage on pooled collateral risk capital inefficiency, and are more likely to carry expensive premiums.
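To make the capital-efficiency point concrete, here is a sketch of how a pooled-capital market might check whether it can sell more cover. The limits (an overall leverage multiple and a per-protocol cap) are hypothetical rules chosen for illustration, not any specific protocol's parameters.

```python
def underwriting_capacity(pool_capital: float, leverage: float,
                          active_cover: dict[str, float],
                          protocol: str, max_share_per_protocol: float) -> float:
    """Remaining cover a pooled-capital market could sell on `protocol`.

    Hypothetical rules: total cover across all protocols may reach
    pool_capital * leverage, but cover on any single protocol is capped at
    max_share_per_protocol * pool_capital, so one exploit cannot drain the pool.
    """
    total_room = pool_capital * leverage - sum(active_cover.values())
    protocol_room = pool_capital * max_share_per_protocol - active_cover.get(protocol, 0.0)
    return max(0.0, min(total_room, protocol_room))
```

With 100 units of capital and a 3x multiple, the pool can carry 300 units of cover spread across protocols, on the assumption that they are unlikely to fail at the same time, while the per-protocol cap keeps any single exploit from claiming more than the pool holds.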

Proof of loss is an important guardrail. If payouts are not limited to actual losses, then unbounded losses as a result of any qualifying event can bankrupt an entire marketplace.
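The effect of proof of loss can be shown with a small sketch comparing payouts with and without it. The function name and claim figures are hypothetical.

```python
def total_payouts(claims: list[tuple[float, float]], proof_of_loss: bool) -> float:
    """Sum payouts for (cover_amount, actual_loss) claims after a qualifying event.

    With proof of loss, each policy pays the lesser of its cover and the loss
    actually suffered; without it, every policy pays its full cover amount.
    """
    if proof_of_loss:
        return sum(min(cover, loss) for cover, loss in claims)
    return sum(cover for cover, _ in claims)
```

For claims of (cover, actual loss) = (100, 30), (50, 0) and (200, 200), requiring proof of loss pays out 230 instead of 350, and the policyholder who lost nothing receives nothing.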

These are just some of the complications, and there is clearly a lot of nuance here. But given the above we can start to understand why DeFi insurance is such a challenging nut to crack.

So what are the possible insurance models, and how do they compare?

We can define different models by looking at key parameters:

Discrete policies or open markets: Policies that provide cover for a discrete amount of time and with well-defined terms, or open markets that trade the future value of a token or event? These coincide with liquid vs locked-in coverage.

On-chain or off-chain: Is the insurance mechanic DeFi native (and perhaps subject to some of the same underlying risks!) or more traditional with structured policies from brick-and-mortar underwriters?

Resolving claims: How are claims handled, and who determines validity? Are payouts manual, or automatic? If coverage is tied to specific events, be careful to note the difference between economic and technical failure, where faulty economic designs may result in loss even if the code operated as designed.

Let’s look at a few of the leading players to see how they stack up:

Hybrid insurance markets:

Straddling the DeFi and traditional markets, Nexus Mutual is a real insurance mutual (even requiring KYC to become a member), and offers traditional insurance contracts with explicitly defined coverage terms for leading DeFi protocols. Claim validity is determined by mutual members, and they use a pooled-capital model for up to 10x capital efficiency.

This model clearly works: Nexus Mutual carries the most coverage in DeFi today, with $500M in TVL underwriting $900M in coverage. Even so, that coverage pales in comparison to the $50B+ locked in DeFi today.

Prediction markets and futures contracts:

We bundle these models together because several projects are building either prediction markets or futures contracts, both of which can be used as a form of insurance contract.

In the case of futures contracts, short selling offers a way to hedge the price of tokens through an open market. Naturally, a short futures position protects against pure price risk, paying out if the spot price at expiry falls below the price at which the contract was sold. This covers the whole universe of reasons why a token price could decline, including exploits and attacks.
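A short futures hedge can be sketched as follows, assuming the holder shorts exactly as many tokens as they hold and ignoring fees, funding and margin calls. The function name and prices are hypothetical.

```python
def hedged_value(tokens: float, futures_price: float, spot_at_expiry: float) -> float:
    """Value at expiry of holding `tokens` and shorting the same amount in futures.

    The short futures P&L is tokens * (futures_price - spot_at_expiry), which
    offsets any change in the spot value of the tokens held.
    """
    spot_value = tokens * spot_at_expiry
    short_pnl = tokens * (futures_price - spot_at_expiry)
    return spot_value + short_pnl
```

Holding 100 tokens with a short at 20, the combined position is worth 2,000 at expiry whether the spot price collapses to 5 after an exploit or rallies to 30: the hedge removes downside price risk but also gives up the upside.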

Prediction markets are a kind of subset of options markets, allowing market participants to bet on the likelihood of a future outcome. In this case, we can create markets that track the probability of specific kinds of risks, including the probability that a protocol would be exploited, or the token price would decline.
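Using a prediction market as cover can be sketched like this. We assume a hypothetical binary market whose YES shares pay 1 unit if a protocol is exploited before a deadline and 0 otherwise, so the YES price approximates the market's estimated probability of an exploit. The `exploited` flag stands in for the oracle that resolves the market.

```python
def binary_cover_result(cover_wanted: float, yes_price: float, exploited: bool) -> float:
    """Net profit of buying enough YES shares to receive `cover_wanted` if exploited.

    Each YES share costs `yes_price` and pays 1.0 if the market resolves YES.
    `exploited` stands in for the oracle's resolution of the market.
    """
    if not 0.0 < yes_price < 1.0:
        raise ValueError("yes_price must be strictly between 0 and 1")
    shares = cover_wanted          # one share per unit of payout
    premium = shares * yes_price   # upfront cost, playing the role of a premium
    payout = shares if exploited else 0.0
    return payout - premium
```

At a YES price of 0.05 (an implied 5% chance of an exploit), covering 1,000 units costs 50: the buyer nets +950 if the exploit happens and -50 otherwise.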

Neither options nor prediction markets target insurance as a use case, which makes them less efficient than pure insurance plays: they generally struggle with capital efficiency (offering limited leverage or pooled models today) and with inefficient payouts (prediction markets face an oracle challenge).

Automated insurance markets:

Exploits in DeFi protocols are discrete attacks, bending the code to an attacker's favor. They also leave an imprint, stranding the state of the protocol in a clearly attacked position. What if we could develop a program that checks for such an attack? These programs could form the foundation for payouts on insurance markets.

This is the fundamental idea behind Risk Harbor. These models are advantageous, given that payouts are automatic, and incentives are aligned and well understood. These models can also make use of pooled funds, enabling greater capital efficiency, and carry limited to no governance overhead.
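One hypothetical form such a check could take is an invariant on the protocol's state, for example that the assets backing each deposit share should not suddenly collapse. The sketch below is our own illustration, not Risk Harbor's actual logic; the snapshot fields, the 5% threshold and the pro-rata payout rule are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class PoolSnapshot:
    """Hypothetical protocol state read from chain at one block."""
    total_assets: float  # underlying tokens held by the protocol
    total_shares: float  # deposit shares issued to users

    def assets_per_share(self) -> float:
        return self.total_assets / self.total_shares if self.total_shares else 0.0


def exploit_detected(before: PoolSnapshot, after: PoolSnapshot,
                     max_drawdown: float = 0.05) -> bool:
    """Flag an attack when backing per share falls by more than max_drawdown."""
    reference = before.assets_per_share()
    if reference == 0.0:
        return False
    return after.assets_per_share() < reference * (1 - max_drawdown)


def automatic_payout(cover: float, before: PoolSnapshot, after: PoolSnapshot,
                     max_drawdown: float = 0.05) -> float:
    """Pay cover pro rata to the fall in backing per share, with no claims process."""
    if not exploit_detected(before, after, max_drawdown):
        return 0.0
    loss_fraction = 1 - after.assets_per_share() / before.assets_per_share()
    return cover * loss_fraction
```

A drop in assets per share from 1.0 to 0.4 trips the check and pays 60% of a 100-unit policy automatically, while an ordinary 1% dip pays nothing. Picking a threshold that reliably separates attacks from ordinary losses is the hard part of such a design.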

However, it may be challenging to design such a system. As a thought experiment, if we could programmatically check if a transaction results in an exploit, why not just incorporate this check into all transactions up front, and deny transactions that would result in an exploit?

Tranche-based insurance:

DeFi yields can be significant, and most users would happily trade a portion of their yield in return for some measure of protection. Saffron pioneers this by letting users select their preferred risk profile when they invest in DeFi protocols. Riskier investors would select the “risky tranche” which carries more yield but loses out on liquidation preferences to the “safe tranche” in the case of an exploit. In effect, riskier participants subsidize the cost of insurance to risk averse participants.
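The tranche mechanics can be sketched as a simple two-tranche waterfall. This is a hypothetical model (the function, the fixed senior rate and the figures are assumptions), not Saffron's actual payout formula: the safe tranche is owed its principal plus a fixed return and is paid first, and the risky tranche keeps whatever is left.

```python
def split_pool(final_assets: float, senior_principal: float,
               senior_rate: float) -> tuple[float, float]:
    """Return (safe_payout, risky_payout) for a two-tranche pool.

    Hypothetical rule: the safe tranche is owed principal plus a fixed rate and
    is paid first; the risky tranche receives the remainder, so it earns the
    excess yield in good times and absorbs losses first after an exploit.
    """
    senior_claim = senior_principal * (1 + senior_rate)
    safe_payout = min(final_assets, senior_claim)
    risky_payout = final_assets - safe_payout
    return safe_payout, risky_payout
```

With 100 in each tranche and a 5% safe-tranche rate, a pool that ends at 220 pays 105 to the safe tranche and 115 to the risky one; after an exploit that leaves 120, the safe tranche still receives 105 while the risky tranche is cut to 15.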

Traditional insurance

For everything else, traditional insurance companies are underwriting specific crypto companies and wallets, and may someday begin underwriting DeFi contracts. However this is usually rather expensive, as these underwriters are principled and currently have limited data to properly assess the risk profiles inherent to crypto products.

The fundamental challenges around pricing insurance coverage, competing with DeFi yields, and assessing claims, in combination with limited capital efficiency, have kept insurance from gaining meaningful traction to date.

These challenges collectively result in the largest bottleneck: capturing enough underwriting capital to meet demand. With $50B deployed in DeFi, we clearly need both a lot of capital and capital efficient markets. How do we solve this?

One path could be through protocol treasuries. Most DeFi projects carry significant balance sheets denominated in their own tokens. These treasuries have acted as pseudo-insurance pools in the past, paying out in the event of exploits. We can see a future where this relationship is formalized, and protocols choose to deploy a portion of their treasury as underwriting capital. This could give the market confidence to participate, and the protocols would earn yield in the process.

Another path could be through smart contract auditors. As the experts in assessing risk, part of their business model could be to charge an additional fee for their services, and then back up their assessments by committing proceeds as underwriting capital.

Whatever the path, insurance is both critical and inevitable. Current models may be lacking in some areas, but will evolve and improve from here.

https://insights.flagshipadvisorypartners.com/insights/crypto-starting-to-realize-its-promise-in-payments

Anupam Majumdar and Dan Carr, Feb 2022